📄 Hongliu Cao (2024) Recent Advances in Text Embedding: A Comprehensive Review of Top-Performing Methods on the MTEB Benchmark (arXiv:2406.01607v1 [cs.CL])

Part 1 of a series on universal text embeddings.

In this section, we cover:

- Introduction to text embeddings in 4 'eras'

- Large scale pretraining

- LLM-based text embeddings

- Evaluation and benchmarks

- Challenges in the field and future directions

- 1: Introduction to Text Embedding Models

- 2: Overview of the Four Eras of Text Embeddings

- 3: The First Era: Early Distributed Representations (Word Embeddings)

- 4: The Second Era: Contextual Word Representations

- 5: The Third Era: Sentence-Level Representation Learning

- 6: The Fourth Era: Universal Text Embeddings

- 7: Advances in the Fourth Era: Large-Scale Pretrained Models

- 8: Subset of the Fourth Era: LLM-Based Text Embeddings

- 9: Applications of Universal Text Embeddings

- 10: Evaluation and Benchmarks: The Role of MTEB

- 11: Challenges and Future Directions

- 11.1: Task and Domain Coverage Gaps

- 11.2: Efficiency and Practicality

- 11.3: Better Use of Instructions and Context

- 11.4: New Similarity Measures and Objectives

- 11.5: Handling Longer Texts and Compositionality

- 11.6: Continual Learning and Adaptation

- 11.7: Integration with Knowledge and Reasoning

- 11.8: Evaluation Improvements

- 12: Conclusion and Summary

1: Introduction to Text Embedding Models

Text embedding models are techniques that convert pieces of text – whether words, sentences, or documents – into numerical vector representations. An embedding is typically a fixed-length, low-dimensional dense vector that captures the essence or meaning of the text (⇒). The goal is for texts with similar meaning to have vectors that are near each other in this vector space (⇒). These representations serve as compact features that algorithms can efficiently use for various language processing tasks. In modern NLP and machine learning, embeddings act as the backbone for understanding text content: rather than comparing raw words (which are discrete and sparse), models compare their embeddings to assess semantic similarity, perform clustering, or feed into downstream prediction tasks.
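To make "near each other in this vector space" concrete, here is a minimal sketch of cosine similarity, one common way to compare two embeddings. The three 4-dimensional vectors are invented for illustration and do not come from any real model; real embeddings typically have hundreds of dimensions.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)

# Toy "embeddings": hand-picked values, NOT the output of a trained model.
toy_embeddings = {
    "a cat sat on the mat": [0.9, 0.1, 0.0, 0.2],
    "a kitten rested on the rug": [0.8, 0.2, 0.1, 0.3],
    "stock prices fell sharply": [0.0, 0.9, 0.7, 0.1],
}

query = toy_embeddings["a cat sat on the mat"]
similar = cosine_similarity(query, toy_embeddings["a kitten rested on the rug"])
unrelated = cosine_similarity(query, toy_embeddings["stock prices fell sharply"])
```

With these toy values, `similar` comes out much higher than `unrelated`, which is the behavior a good embedding model is trained to produce for real text.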

Role in NLP Applications: Because they distill text into numerical form, embeddings power a wide range of NLP tasks and applications. They have been used for text classification (turning each document into a feature vector for a classifier) (⇒), clustering (grouping documents by topic) (⇒), sentiment analysis, information retrieval (search engines and question answering) (⇒), recommendation systems, and more (⇒). With the rise of large language model (LLM) applications, high-quality embeddings are even more critical – for example, retrieval-augmented generation uses embeddings to search a knowledge base for relevant documents to feed into an LLM for question answering (⇒). In summary, text embeddings have become a foundational technology in NLP, enabling efficient semantic search, matching, and reasoning across massive text corpora (⇒). Their development has evolved through several distinct phases, as we will explore next.

2: Overview of the Four Eras of Text Embeddings

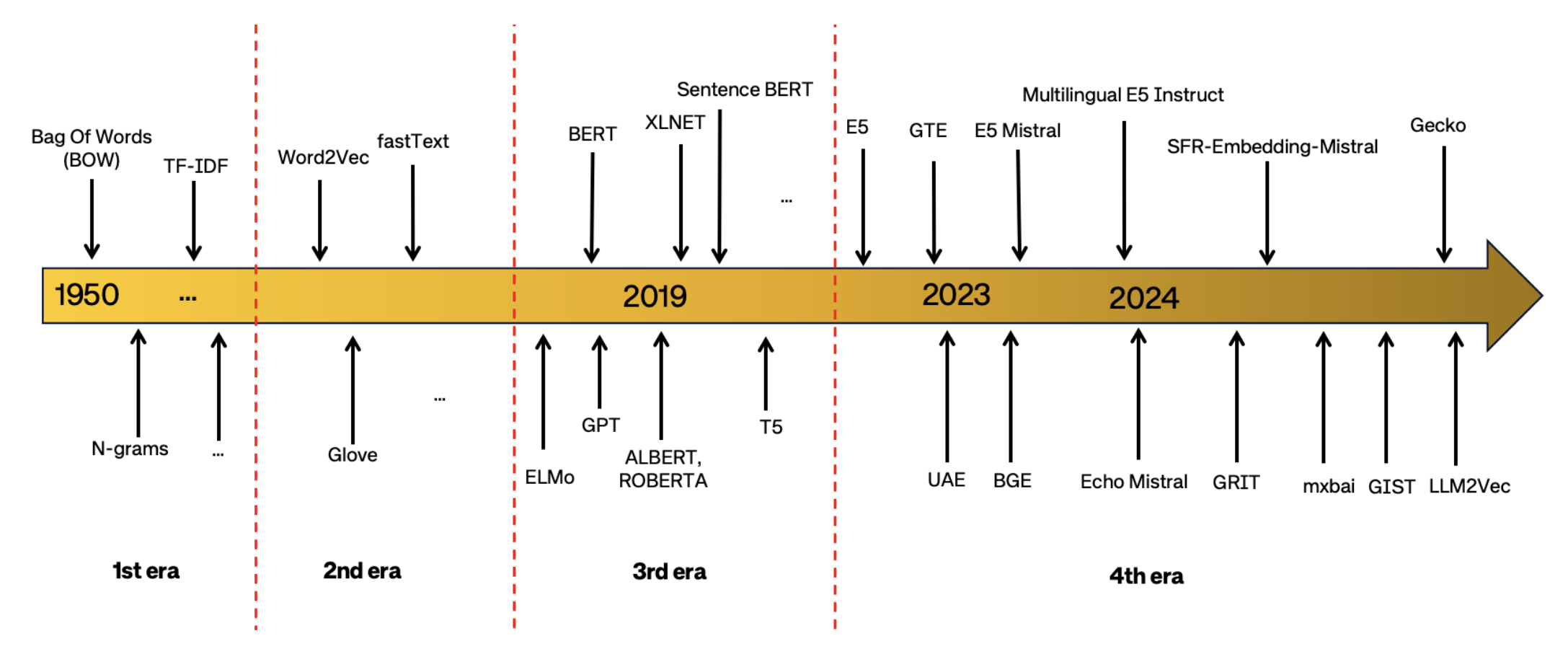

Over the past decades, text representation techniques have progressed through four major eras, each marked by a significant shift in how textual meaning is captured (Figure 1). The progression runs from simple word-count features to sophisticated universal embeddings that generalize across tasks (⇒) (⇒). Each transition was driven by limitations in the previous generation and by breakthroughs that enabled richer encoding of meaning.

2.1: 1st Era – Early Text Representations

In the beginning, text was represented by count-based features such as Bag-of-Words and TF‑IDF. These aren’t learned embeddings in the modern sense, but they set the stage by representing text as high-dimensional, sparse vectors of word counts or weighted frequencies (⇒) (⇒). However, they treated words independently and did not capture semantic context or meaning: “bank” would have the same representation in “river bank” and “bank deposit”. This limitation motivated a shift to distributed representations.
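The following sketch shows one simple TF-IDF variant (raw term count times log(N / document frequency)); many other weightings exist, and the three example documents are made up. It illustrates the limitation described above: the word "bank" occupies a single vocabulary dimension and gets the same weight whether the document is about rivers or loans.

```python
import math
from collections import Counter

def tfidf_vectors(documents):
    """Return (vocabulary, vectors) using term count * log(N / document frequency)."""
    tokenized = [doc.lower().split() for doc in documents]
    vocabulary = sorted({tok for doc in tokenized for tok in doc})
    n_docs = len(tokenized)
    # Document frequency: in how many documents each token appears at least once.
    doc_freq = Counter(tok for doc in tokenized for tok in set(doc))
    vectors = []
    for doc in tokenized:
        counts = Counter(doc)
        vectors.append(
            [counts[t] * math.log(n_docs / doc_freq[t]) for t in vocabulary]
        )
    return vocabulary, vectors

docs = [
    "the river bank flooded",
    "the bank approved the loan",
    "the loan was repaid",
]
vocab, vecs = tfidf_vectors(docs)
bank_column = vocab.index("bank")
the_column = vocab.index("the")
```

Here `vecs[0][bank_column]` and `vecs[1][bank_column]` are identical even though the two documents use "bank" in different senses, and the ubiquitous word "the" is weighted down to zero.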

2.2: 2nd Era – Distributed Word Embeddings

The first true embedding models learned dense vector representations for words by leveraging context in large corpora. This era addressed the need to capture semantic similarity and relations between words beyond raw counts. It enabled generalization – words appearing in similar contexts ended up with similar vectors, aligning with the linguistic intuition that “you shall know a word by the company it keeps.”

2.3: 3rd Era – Contextual Word Embeddings

Researchers recognized that even word embeddings were limited because they were static (each word has one vector, regardless of context). The next era introduced context-sensitive models, allowing the same word to have different embeddings depending on its surrounding words. This shift greatly improved the fidelity of meaning representation, handling polysemy (e.g. “bank” in different contexts) by producing dynamic embeddings that change with context (⇒).

2.4: 4th Era – Universal Text Embeddings

Most recently, the field has moved toward training universal embedding models that aim to be effective for any text input (words, sentences, or documents) and any task. This era was driven by the need for general-purpose representations that transfer across domains and tasks (⇒). Breakthroughs in data scale, model architectures, and multi-task learning enabled models that can produce a single text embedding useful for diverse applications (from retrieval to clustering to classification) without task-specific fine-tuning (⇒). This represents a paradigm shift: instead of building a new embedding for each task, one model serves as a universal encoder. We’ll delve into each era in detail below, examining the representative methods, their innovations, and the new capabilities they unlocked.

Figure 1 shows the four different eras of text embeddings, from early count-based vectors to universal multi-task models (⇒). Each era built on the last, overcoming previous shortcomings and expanding what embedding models can do.

3: The First Era: Early Distributed Representations (Word Embeddings)

The first era of learned text embeddings began with distributed word representations – dense vectors that capture a word’s meaning by training on large text corpora. Pioneering models like Word2Vec, GloVe, and fastText in the early-to-mid 2010s exemplify this approach (⇒). These methods marked a significant step forward from count-based features, because they could learn semantic relationships in an unsupervised manner and represent those in a continuous vector space (⇒).

Notable Models: Word2Vec (Mikolov et al., 2013) introduced two efficient neural training schemes for word embeddings: Continuous Bag-of-Words (CBOW) and Skip-gram. In CBOW, the model learns to predict a target word from its context words; in Skip-gram, it does the inverse, predicting surrounding words given a target (⇒). By doing so on billions of words, Word2Vec learns vectors such that words appearing in similar contexts (like “king” and “queen,” or “Paris” and “London”) end up nearby in the vector space. GloVe (Pennington et al., 2014) took a slightly different approach: rather than relying only on local context windows, it leveraged global corpus statistics (co-occurrence counts) to train embeddings, effectively factorizing a word-context co-occurrence matrix to yield word vectors (⇒). This helped capture both local and global semantic relationships. fastText (Bojanowski et al., 2017) further improved on Word2Vec by accounting for subword information (⇒). Instead of treating each word as an atomic unit, fastText breaks words into character n-grams and learns representations for those. This allows the model to generate embeddings for unseen words (by composing the n-gram vectors) and to capture morphological regularities: for example, “running”, “runs”, and “runner” share character n-grams such as “run” and thus have related vectors.
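The difference between the two Word2Vec schemes is easiest to see in the training examples they extract from a sentence. The sketch below only builds those examples; it does not train a network, and the function names and the `window` parameter are our own choices for illustration.

```python
def skipgram_pairs(tokens, window=2):
    """(target, context) pairs: the target word is used to predict each neighbor."""
    pairs = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((target, tokens[j]))
    return pairs

def cbow_examples(tokens, window=2):
    """(context_words, target) examples: the neighbors jointly predict the target."""
    examples = []
    for i, target in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        if context:
            examples.append((context, target))
    return examples

sentence = "the quick brown fox jumps".split()
sg = skipgram_pairs(sentence, window=1)
cb = cbow_examples(sentence, window=1)
```

With `window=1`, Skip-gram produces one pair per neighbor (for example `("quick", "the")` and `("quick", "brown")`), while CBOW produces a single example per position (for example `(["the", "brown"], "quick")`).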

Key Characteristics: These early embedding models produce a single static vector per word type. The vector captures an average sense of the word from all its usage in the training corpus (⇒). This was powerful: such embeddings encode latent dimensions of meaning (e.g., gender, royalty for king/queen) and have been shown to capture analogies (King – Man + Woman ≈ Queen in the vector arithmetic). They significantly advanced NLP by providing general-purpose word features that could be plugged into downstream models. For instance, using Word2Vec or GloVe embeddings as input features improved performance on tasks like named entity recognition, sentiment analysis, and machine translation, compared to one-hot or frequency-based features.
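The analogy arithmetic can be sketched with hand-built 2-dimensional vectors whose axes loosely stand for "royalty" and "gender". These vectors are constructed so that the analogy holds; they are an assumption for illustration, not learned embeddings, and the helper names are our own.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

# Hand-built toy vectors: axis 0 ~ "royalty", axis 1 ~ "gender". Not learned.
vectors = {
    "king": [0.9, 0.8],
    "queen": [0.9, -0.8],
    "man": [0.1, 0.8],
    "woman": [0.1, -0.8],
    "prince": [0.7, 0.7],
    "apple": [-0.8, 0.1],
}

def analogy(a, b, c, vectors):
    """Word closest (by cosine) to vec(a) - vec(b) + vec(c), excluding the inputs."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = {w: v for w, v in vectors.items() if w not in (a, b, c)}
    return max(candidates, key=lambda w: cosine(target, candidates[w]))

result = analogy("king", "man", "woman", vectors)
```

Excluding the three input words from the candidates is the usual convention in analogy evaluation; without it, the nearest neighbor is often one of the inputs.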

Limitations: A major limitation is the lack of context sensitivity. The embedding for a word like “bank” is the same in “bank account” and “river bank”, so it cannot fully disambiguate meaning from context. Likewise, static embeddings don’t naturally handle phrases or sentences – to get a representation for a sentence, one had to average or sum the word vectors, which loses word order and nuance. Despite these drawbacks, era-1 embeddings had enormous practical impact. They became standard tools in industry and academia, used to initialize neural networks or as features in non-neural classifiers. They also spurred analysis of the vector spaces, revealing that simple training objectives can encode surprisingly rich linguistic structure (⇒). This era laid the groundwork, but the quest for capturing context and deeper meaning led to the next breakthrough.
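The loss of word order under averaging is easy to demonstrate. In this sketch the word vectors are invented toy values; the point is only that any order-insensitive pooling assigns the same vector to sentences with opposite meanings.

```python
def average_embedding(tokens, word_vectors):
    """Average the static vectors of the given tokens (a bag-of-vectors)."""
    dim = len(next(iter(word_vectors.values())))
    total = [0.0] * dim
    for tok in tokens:
        for k, value in enumerate(word_vectors[tok]):
            total[k] += value
    return [t / len(tokens) for t in total]

# Toy static word vectors, chosen by hand for illustration.
word_vectors = {
    "dog": [1.0, 0.0, 0.5],
    "bites": [0.0, 1.0, 0.5],
    "man": [0.5, 0.5, 1.0],
}

a = average_embedding("dog bites man".split(), word_vectors)
b = average_embedding("man bites dog".split(), word_vectors)
```

The two sentence vectors `a` and `b` coincide, so no downstream model could tell who bit whom from these representations alone.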

4: The Second Era: Contextual Word Representations

While static word embeddings improved upon raw counts, they still fell short in handling context-dependent meaning. The second era introduced contextual word representations – models that produce different embeddings for a word depending on its surrounding context. Instead of each word having one fixed vector, the vector is dynamically computed from the entire sentence (or larger context) in which the word appears (⇒). This innovation was enabled by advances in neural language models, particularly recurrent networks and Transformers trained on language modeling tasks.

ELMo – Embeddings from Language Models (2018): ELMo was a seminal model that demonstrated the power of deep, contextual embeddings. It used a bidirectional LSTM (a recurrent neural network) to encode text, concatenating left-to-right and right-to-left pass representations for each word (⇒). Crucially, ELMo is trained with a bidirectional language modeling objective (predicting the next word in the forward direction and the previous word in the backward direction), so the internal LSTM states become sensitive to the word’s context. The result is that a word’s ELMo embedding will differ in different sentences, effectively capturing polysemy. Peters et al. showed that plugging ELMo embeddings into various task models (question answering, sentiment, coreference resolution, etc.) yielded significant performance gains over using static embeddings, because the contextual nuance was preserved in the input (⇒).

OpenAI GPT (2018): Shortly after, the first Generative Pre-trained Transformer (GPT) model applied the Transformer architecture (Vaswani et al., 2017) to learn contextual embeddings. GPT is a unidirectional Transformer (it reads text left-to-right) trained on a massive corpus with an unsupervised objective (predicting the next word) (⇒). This model demonstrated that the attention mechanism in Transformers can capture long-range dependencies and richer context than RNNs, yielding powerful text representations. Moreover, GPT helped popularize the recipe of pre-training a Transformer on unlabeled text and then fine-tuning it on a specific task, which came to dominate transfer learning in NLP (⇒). Its contextual embeddings were shown to transfer better to new tasks compared to LSTM-based ones (⇒), likely due to the Transformer's superior capacity to model language.

BERT – Bidirectional Encoder Representations from Transformers (2018): BERT took contextual embeddings to the next level by using a bidirectional Transformer and novel training objectives (⇒). Instead of reading only left-to-right, BERT’s encoder reads both directions, allowing context on both sides of a word to influence its embedding. BERT is trained on masked language modeling (predicting hidden words) and next sentence prediction tasks (⇒), which encourage the model to build a deep understanding of language semantics and coherence. The result is a powerful encoder that produces contextually-rich word (and sentence) embeddings. Upon its release, BERT achieved state-of-the-art results on a wide array of NLP benchmarks, from question answering to natural language inference, by fine-tuning the pretrained model on those tasks. This showed how general its contextual embeddings were – they contained enough information to be adapted to many challenges.
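To illustrate what "predicting hidden words" means in practice, here is a rough sketch of how masked-language-modeling inputs can be prepared. The proportions (select about 15% of tokens; of those, 80% become `[MASK]`, 10% become a random token, 10% stay unchanged) follow the BERT paper rather than anything stated in this article, and selecting each token independently is a simplification. The function name and the `seed` parameter are our own.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels).

    labels[i] holds the original token at positions the model must predict,
    and None at all other positions.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)               # usually: hide the token
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))  # sometimes: random token
            else:
                corrupted.append(tok)                # sometimes: leave as-is
        else:
            labels.append(None)
            corrupted.append(tok)
    return corrupted, labels

tokens = "the bank raised interest rates again today".split()
corrupted, labels = mask_tokens(tokens, vocab=["river", "loan", "rates"], mask_prob=0.5)
```

Because the model sees the words on both sides of each hidden position, it has to use bidirectional context to recover the original token, which is what shapes the contextual embeddings.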

Impact: Contextual embeddings resolved the polysemy issue: the vector for a word now reflects the specific meaning in that sentence. They also capture subtle syntax and semantic relationships at the sentence level. For example, BERT’s embeddings encode the difference in meaning between “the bank raised interest rates” and “the bank was flooded by water” – something Word2Vec could not do. In real-world applications, this meant much better performance for systems that need true language understanding. A notable example is search engines: Google announced in 2019 that it had applied BERT models to its search ranking, helping it understand 10% of English queries more effectively by considering the full context of words like “to” and “for” in queries (⇒). This contextual understanding led to more relevant search results. Likewise, virtual assistants and question-answering systems became far more accurate using BERT-style encoders to comprehend user queries. The second era thus unlocked a new level of meaning representation, setting the stage for models that operate not just at the word level, but at the level of whole sentences and beyond.

5: The Third Era: Sentence-Level Representation Learning

With contextual word models like BERT, the community next turned to deriving fixed-length sentence embeddings – vectors that represent an entire sentence or paragraph. While one could obtain sentence embeddings by pooling BERT’s word embeddings (e.g., averaging or using the [CLS] token output), early experiments found this often wasn’t optimal for tasks like semantic similarity. The third era saw the development of models explicitly trained to produce high-quality sentence-level representations, bridging the gap between word-level context and sentence or paragraph understanding.
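The two pooling options mentioned above can be sketched as follows, assuming we already have one vector per token from an encoder. The token vectors are toy values, and the attention mask convention (1 for real tokens, 0 for padding) is the one commonly used with BERT-style models.

```python
def cls_pool(token_vectors):
    """Use the vector at the first position (the [CLS] token in BERT-style inputs)."""
    return list(token_vectors[0])

def mean_pool(token_vectors, attention_mask):
    """Average token vectors, skipping padding positions (mask value 0)."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    kept = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:
            kept += 1
            for k in range(dim):
                total[k] += vec[k]
    if kept == 0:
        raise ValueError("attention mask selects no tokens")
    return [t / kept for t in total]

# Toy encoder output for "[CLS] hello world [SEP] [PAD]"; values are invented.
token_vectors = [
    [1.0, 0.0],  # [CLS]
    [2.0, 2.0],  # hello
    [4.0, 0.0],  # world
    [1.0, 2.0],  # [SEP]
    [9.0, 9.0],  # [PAD], must not influence the result
]
attention_mask = [1, 1, 1, 1, 0]

cls_vector = cls_pool(token_vectors)
mean_vector = mean_pool(token_vectors, attention_mask)
```

Forgetting the mask is a common bug: padding vectors would otherwise leak into the average and make the embedding depend on batch padding length.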

Sentence-BERT (2019): One of the landmark approaches was Sentence-BERT (SBERT) (⇒). SBERT builds on the BERT encoder but fine-tunes it in a siamese or twin-network setup on pairs of sentences. Using a similarity objective (e.g., making the embeddings of sentence pairs closer if they are paraphrases or entailments), SBERT learns to produce sentence embeddings that are directly useful for semantic similarity comparison (⇒). This was important because out-of-the-box BERT embeddings, when compared via cosine similarity, did not correlate well with human similarity judgments – SBERT’s fine-tuning fixed that issue. The result was a model that could generate an embedding for any sentence such that similar sentences map to nearby points. This dramatically improved tasks like semantic textual similarity (STS), duplicate question detection, and clustering of sentences by topic, all while being much faster than using a cross-attention model for every pair (SBERT can encode each sentence once and reuse the embeddings for any comparison).
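One of the objectives described in the SBERT paper is a regression loss: the cosine similarity of the two sentence embeddings is pushed toward a gold similarity score with mean squared error. The sketch below computes that loss for a batch. The `toy_encode` lookup table stands in for the shared BERT encoder and is purely hypothetical; in the real setup the same network, with the same weights, encodes both sentences.

```python
import math

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def cosine_mse_loss(pairs, gold_scores, encode):
    """Mean squared error between cosine(encode(a), encode(b)) and the gold score.

    The same `encode` callable is applied to both sentences (siamese setup).
    """
    total = 0.0
    for (a, b), gold in zip(pairs, gold_scores):
        total += (cosine(encode(a), encode(b)) - gold) ** 2
    return total / len(pairs)

# Stand-in encoder: a lookup table of invented vectors, NOT a real model.
TOY_VECTORS = {
    "A man is playing guitar": [1.0, 0.2],
    "Someone plays a guitar": [0.9, 0.3],
    "The stock market crashed": [-0.2, 1.0],
}

def toy_encode(sentence):
    return TOY_VECTORS[sentence]

pairs = [
    ("A man is playing guitar", "Someone plays a guitar"),
    ("A man is playing guitar", "The stock market crashed"),
]
gold = [1.0, 0.0]  # hypothetical similarity labels scaled to [0, 1]
loss = cosine_mse_loss(pairs, gold, toy_encode)
```

Because each sentence is encoded independently, embeddings can be computed once and cached; comparing n sentences then needs n encoder passes plus cheap cosine computations, rather than one full model pass per pair.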

Universal Sentence Encoder (2018): Slightly earlier, researchers at Google released the Universal Sentence Encoder (USE) (⇒). USE was trained on a variety of unsupervised and supervised tasks (including conversation response prediction and SNLI natural language inference data) to encourage a universal sentence-level vector that works for many purposes. It came in two flavors: one based on a Transformer encoder and one on a deep averaging network, both producing 512-dimensional sentence embeddings. The “universal” in its name signified that it was intended as a general-purpose sentence representation, usable for tasks like sentiment classification, question similarity, and clustering without needing task-specific training. Indeed, USE embeddings were shown to perform well across a range of transfer tasks out of the box.

Other Notable Models: Several other approaches enriched this era. For example, InferSent (2017) used supervised NLI data to train a BiLSTM-based sentence encoder for general use, an early hint that supervised training on the right data can yield transferable, general-purpose features. Quick-Thought (2018) was trained to predict neighboring sentences in a text as a way to learn sentence embeddings. More recent models like Sentence-T5 (2021) adapted powerful text-to-text Transformers (like T5) specifically for producing sentence embeddings at scale (⇒).

Applications: Sentence-level embeddings unlocked efficient semantic search and matching at the sentence or paragraph scale. For instance, using SBERT or USE, one can build a semantic search engine that, given a query sentence, retrieves relevant FAQs or knowledge base articles by embedding the query and documents and nearest-neighbor searching in the vector space. This is far faster than comparing the query to every document with a cross-attention model. Companies began deploying such semantic search for support tickets, documents, and web search queries. Another application is clustering: with good sentence embeddings, one can cluster a large collection of sentences (e.g., news headlines or customer reviews) to discover themes without needing manual labeling. Likewise, recommendation systems and deduplication tasks benefit: for example, finding similar questions in a forum (to merge duplicates) or recommending similar products based on review text. Overall, the third era was about making larger text units (beyond single words) first-class citizens in the embedding space. However, many of these models, while more general than word embeddings, were still typically tuned or evaluated on a specific subset of tasks (e.g., STS and NLI for SBERT). This set the stage for the fourth era, which strives for truly universal embeddings across all kinds of tasks and domains.
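A minimal sketch of the semantic search pattern described above: document embeddings are normalized once at indexing time, and each query is answered with a brute-force nearest-neighbor scan. The FAQ identifiers and vectors are invented, and a production system would typically replace the linear scan with an approximate nearest-neighbor index.

```python
import math

def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def build_index(doc_embeddings):
    """Pre-normalize document vectors once so search reduces to dot products."""
    return {doc_id: normalize(vec) for doc_id, vec in doc_embeddings.items()}

def search(query_embedding, index, k=2):
    """Return the k (doc_id, cosine_score) pairs most similar to the query."""
    q = normalize(query_embedding)
    scored = [
        (sum(a * b for a, b in zip(q, vec)), doc_id)
        for doc_id, vec in index.items()
    ]
    scored.sort(reverse=True)
    return [(doc_id, score) for score, doc_id in scored[:k]]

# Toy FAQ embeddings; in practice these would come from a sentence encoder.
faq_embeddings = {
    "reset-password": [0.9, 0.1, 0.0],
    "update-billing": [0.1, 0.9, 0.1],
    "delete-account": [0.6, 0.1, 0.7],
}
index = build_index(faq_embeddings)
hits = search([0.8, 0.0, 0.1], index, k=2)
```

The documents are embedded once, so each new query costs one encoder pass plus one dot product per document.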

6: The Fourth Era: Universal Text Embeddings

The fourth era represents the current frontier: universal text embedding models that aim to be broadly effective for any text input and any NLP task. In other words, rather than specializing in one application (like only semantic similarity or only classification), a universal embedding model seeks to produce representations that are consistently useful across a wide spectrum of tasks, domains, languages, and even input lengths (⇒). This is a natural extension of the trends in era three, pushed further by new techniques and the availability of massive training resources.

What Makes Them “Universal”: A universal text embedding (also called a general-purpose embedding) is defined as a single model that can handle diverse tasks – it’s not just good at, say, semantic search, but also helps in classification, clustering, sentence similarity, reranking, summarization, and so on (⇒). Ideally, such an embedding would mimic human understanding in the sense that it captures the key features of text that are relevant to any task a human might do (⇒). Recent work has further expanded this concept to multi-lingual settings (one model for many languages) and even multi-modal or cross-domain inputs (⇒). In our context, we focus on text-only, but across many tasks. The push for universality addresses a practical need: previously, one might need to choose or fine-tune different embedding models for different tasks (one for retrieval, another for classification), but a universal model promises a one-stop solution that saves effort and performs robustly overall.

Catalysts for This Era: Several developments converged to make universal embeddings feasible:

- Massive & Diverse Training Data: Efforts to collect or generate large-scale datasets covering many tasks have paid off. Instead of training on a single-task dataset, modern models train on dozens of datasets spanning retrieval, question answering, paraphrase, translation, etc. This exposes them to many ways language can be used, forcing the embeddings to capture more general features. For example, the availability of datasets like Natural Questions, MS MARCO (for QA retrieval), PAWS (for paraphrase), multiple classification benchmarks, and more allowed composite training regimes. In addition, high-quality synthetic data generated by LLMs became a game-changer (⇒) (⇒). Researchers have used large models (like GPT-3/4) to create pseudo training examples for tasks where data was limited – for instance, generating question–answer pairs for training a retrieval model. This dramatically increased the quantity and diversity of training signals (⇒).

- Advanced Training Techniques: New training objectives and strategies were developed to encourage generalization. Multi-task learning frameworks can alternate between tasks or use clever sampling to balance tasks so that the model does not overfit to one at the expense of others. Contrastive learning has been extended (e.g., using better negative sampling or combining it with classification objectives) to yield embeddings that work for both similarity and decision tasks. Some works introduce an instruction tuning component – providing the model with a task descriptor (like “This is a search query:” vs “This is a summary:”) along with the text, to nudge the embedding to emphasize different aspects of meaning as needed. Overall, the loss functions and training setups in this era are often more complex, combining multiple objectives (a practice sometimes called composed fine-tuning or multi-stage training).

- LLM Backbone and Knowledge Transfer: Importantly, many universal embedding models leverage Large Language Models (LLMs) either as initialization or teacher models (⇒). Using an LLM’s architecture (like a pretrained Transformer with billions of parameters) as the foundation can give the embedding model a head start in linguistic knowledge. Some approaches distill knowledge from an LLM into a smaller embedding model – for example, using the LLM to label or generate training examples, or even directly distilling its embedding outputs (⇒). We’ll discuss this in more detail shortly, but it’s a key aspect: the fourth era doesn’t shy away from using the power of LLMs to achieve its goals.
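As one illustration of "clever sampling to balance tasks", the sketch below uses size-based sampling with an exponent: each task is drawn with probability proportional to its dataset size raised to `alpha`. This is a commonly used scheme that we assume here for illustration; it is not attributed to any specific model in this article, and the dataset sizes are hypothetical.

```python
import random

def task_sampling_probs(dataset_sizes, alpha=0.5):
    """p(task) proportional to size**alpha.

    alpha=1 gives size-proportional sampling, alpha=0 gives uniform sampling;
    values in between damp the dominance of very large datasets.
    """
    weights = {task: size ** alpha for task, size in dataset_sizes.items()}
    total = sum(weights.values())
    return {task: w / total for task, w in weights.items()}

def sample_task_schedule(dataset_sizes, steps, alpha=0.5, seed=0):
    """Pick which task each training step draws its batch from."""
    rng = random.Random(seed)
    probs = task_sampling_probs(dataset_sizes, alpha)
    tasks = list(probs)
    return rng.choices(tasks, weights=[probs[t] for t in tasks], k=steps)

# Hypothetical dataset sizes for three training tasks.
sizes = {"retrieval": 1_000_000, "paraphrase": 10_000, "classification": 100}

proportional = task_sampling_probs(sizes, alpha=1.0)
damped = task_sampling_probs(sizes, alpha=0.5)
schedule = sample_task_schedule(sizes, steps=20)
```

With `alpha=1.0` the small classification set would almost never be sampled; with `alpha=0.5` it is seen far more often, at the cost of repeating its examples more frequently.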

Representative Models: A number of new models exemplify the universal embedding trend, many of them topping leaderboards on the recently introduced MTEB benchmark (more on MTEB below). For instance, GTE (“General Text Embeddings”) by Li et al. (2023) introduced a multi-stage contrastive learning scheme across tasks to learn general embeddings (⇒). BGE (“BAAI General Embedding”) from the Beijing Academy of Artificial Intelligence (BAAI) is a family of models, including the recent BGE-M3, which is multilingual, multi-functional (supporting several retrieval modes), and multi-granular (handling inputs of varying length); it uses self-distillation (teacher signals from its different retrieval modes) to enhance versatility (⇒) (⇒). Another model family, E5 (Wang et al., 2022/2023), is a series of embedding models pretrained in a weakly supervised manner on large web data and fine-tuned on many tasks, including a multilingual version, yielding state-of-the-art results on retrieval and classification tasks (⇒). Researchers at Google DeepMind proposed Gecko, which distills the knowledge of a large language model into a smaller dual-encoder, producing “versatile text embeddings” that perform well on diverse tasks (⇒). Finally, approaches like LLM2Vec have shown that large language models themselves can be used (or slightly adapted) to produce powerful embeddings, hinting that much of the needed information is inherently present in LLMs’ representations (⇒). These models and others in the fourth era are typically evaluated on how broadly they can perform, rather than just on a single benchmark.

Role of MTEB: The Massive Text Embedding Benchmark (MTEB) has been instrumental in this era as both a challenge and a progress tracker. MTEB is a comprehensive evaluation suite introduced to measure embedding model performance across a wide range of tasks and languages (⇒). It spans 8 task types (bitext mining, classification, clustering, pair classification, reranking, retrieval, semantic textual similarity, and summarization) and covers 58 datasets and 112 languages (⇒). The introduction of MTEB made it possible to quantify “universality”: a truly universal embedding model should perform strongly on all sections of MTEB. Initially, results from MTEB showed that no single model dominated every task (⇒), underscoring the challenge and motivating researchers to create better general-purpose models. Now, when a new universal embedding model is proposed, its success is largely measured by improvements on the MTEB leaderboard, demonstrating gains across the board (not just on one niche). In short, MTEB provided a target for the fourth era and an objective way to validate that a model is achieving broad coverage of semantic tasks (⇒) (⇒).

In summary, the fourth era is distinct in aiming for one model to rule them all in the realm of text embeddings. This paradigm shift has been enabled by rich data, novel training schemes, and leveraging LLMs. Next, we discuss some of the specific advances and techniques that have emerged in pursuit of these universal models.

7: Advances in the Fourth Era: Large-Scale Pretrained Models

The drive for universal embeddings has yielded a variety of innovative techniques. Many of the top-performing models in this era share common strategies, centered around scale and effective use of data and pretraining. Here we highlight key advances in training and model design that underpin the fourth era’s success:

7.1: Multi-Task and Instruction-Fused Training

Rather than training on a single objective, modern embedding models are trained on multiple tasks or data sources simultaneously. For example, a single model might be trained to handle retrieval (finding relevant passages for a query), textual entailment (does sentence A imply sentence B?), paraphrase identification, and topic classification all at once. This exposes the model to a wide range of linguistic phenomena. A related idea is instruction tuning for embeddings: models like INSTRUCTOR (Su et al., 2022) or TART (Asai et al., 2022) use task instructions to inform the embedding process. They prepend an instruction (e.g., “Represent the query:” vs “Represent the document:”) to inputs during training (⇒). This helps a single model differentiate between use-cases (a query might emphasize different aspects than a document). By learning from many tasks with instructions, the model can better generalize to new tasks or domains (sometimes called being task-aware (⇒)). The result is a more flexible embedding that can, for instance, both cluster documents and retrieve answers with high performance.
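Mechanically, instruction-based embedding often amounts to prepending a short task description before encoding. The sketch below uses the two example instructions quoted above; the exact wording and format each real model expects differ, so treat the strings, the `with_instruction` helper, and the `encode` callable as placeholders.

```python
# Example instruction strings taken from the text above; real models define
# their own wording and formatting, so these are placeholders.
INSTRUCTIONS = {
    "query": "Represent the query:",
    "document": "Represent the document:",
}

def with_instruction(text, role):
    """Prepend the instruction for `role` so the encoder sees the intended use."""
    if role not in INSTRUCTIONS:
        raise KeyError(f"unknown role: {role!r}")
    return f"{INSTRUCTIONS[role]} {text.strip()}"

def embed_with_instruction(text, role, encode):
    """`encode` is any callable mapping a string to a vector (hypothetical here)."""
    return encode(with_instruction(text, role))

query_input = with_instruction("what is MTEB? ", "query")
doc_input = with_instruction("MTEB is a benchmark for text embeddings.", "document")
```

The same instruction must be used at training and inference time; an embedding model trained with one prefix and queried with another may quietly lose accuracy.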

7.2: Improved Loss Functions and Hard Negative Mining

Another advance is in how models are trained at the loss level. Early contrastive learning for embeddings often relied on randomly sampled or in-batch negatives, but newer methods use hard negative mining: selecting particularly challenging negatives (e.g., a sentence that is topically similar but not actually a match) to make the model learn fine-grained distinctions. There are also tailored training schemes and loss functions; for instance, multi-stage contrastive learning (used in GTE) first pre-trains on large-scale weakly supervised text pairs and then fine-tunes on higher-quality labeled data (⇒), while label-aware contrastive losses incorporate class label information for classification-oriented embeddings. These improvements ensure the resulting embeddings are not just generally close for related texts, but also aligned with the requirements of specific tasks (e.g., keeping different classes distinguishable for classification). In effect, the embedding space becomes more structured to reflect various notions of similarity and difference relevant to human judgments.
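To see why hard negatives matter, consider the InfoNCE-style contrastive loss that is widely used for embedding training. The sketch below computes it for a single query; the vectors are toy unit vectors and the temperature value is an arbitrary choice for illustration, not a setting from any model discussed here.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def info_nce_loss(query, positive, negatives, temperature=0.1):
    """-log( exp(s(q,p)/t) / (exp(s(q,p)/t) + sum_n exp(s(q,n)/t)) ).

    s is the dot product; vectors are assumed to be unit-normalized,
    so s equals cosine similarity.
    """
    logits = [dot(query, positive) / temperature]
    logits += [dot(query, neg) / temperature for neg in negatives]
    max_logit = max(logits)  # subtract the max for numerical stability
    log_denominator = max_logit + math.log(
        sum(math.exp(l - max_logit) for l in logits)
    )
    return log_denominator - logits[0]

query = [1.0, 0.0]
positive = [0.8, 0.6]        # a relevant passage
easy_negative = [-1.0, 0.0]  # clearly unrelated
hard_negative = [0.6, 0.8]   # topically close, but not a match

loss_easy = info_nce_loss(query, positive, [easy_negative])
loss_hard = info_nce_loss(query, positive, [hard_negative])
```

The easy negative contributes almost nothing to the loss, so the model learns little from it; the hard negative yields a noticeably larger loss and therefore a stronger training signal.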

7.3: Leveraging LLMs as Teachers or Backbones

A hallmark of recent advances is the integration of large language models in the embedding learning process. We can identify two patterns: (a) using LLMs to generate training data, and (b) using LLMs as the base model or teacher. In the first case, works have used LLMs like GPT-4 to create synthetic pairs for training. For example, an LLM might generate a question and an answer passage (positive pair) and perhaps a slightly related but incorrect passage (hard negative), which are then used to train a bi-encoder for retrieval. This LLM-augmented data approach massively expands training corpora with high-quality examples that cover corner cases, ultimately improving the learned embeddings’ robustness (⇒). In the second case, models like Gecko and LLM2Vec explicitly start with an LLM’s knowledge. Gecko distills a large model’s knowledge into a smaller embedding model: the LLM generates synthetic queries and relabels positive and hard-negative passages, and the compact model is trained on the resulting data (⇒). LLM2Vec showed that even without explicit distillation, a large decoder-only model with slight adaptations can produce excellent embeddings (⇒). Additionally, some researchers fine-tune LLMs (like GPT-style models) specifically for embedding tasks, essentially turning a generative model into an encoder. All these tactics use the generalization power of LLMs to boost embedding quality, addressing weaknesses of earlier approaches.
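One generic way to use a large model as a teacher is score distillation: for a query and a list of candidate passages, the student's similarity scores are trained to match the distribution implied by the teacher's scores. The sketch below computes a KL-divergence loss for one query. It is a textbook-style illustration under our own assumptions, not the procedure of any specific model named above, and the scores are invented.

```python
import math

def softmax(scores, temperature=1.0):
    scaled = [s / temperature for s in scores]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def kl_distillation_loss(teacher_scores, student_scores, temperature=1.0):
    """KL(teacher || student) over the candidate list for one query."""
    p = softmax(teacher_scores, temperature)
    q = softmax(student_scores, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)

# Hypothetical relevance scores for three candidate passages.
teacher = [4.0, 1.0, 0.5]       # teacher is confident passage 0 is best
student_bad = [1.0, 1.2, 0.9]   # student barely separates the candidates
student_good = [3.8, 1.1, 0.4]  # student ranking close to the teacher's

loss_bad = kl_distillation_loss(teacher, student_bad)
loss_good = kl_distillation_loss(teacher, student_good)
```

Matching a full score distribution gives the student graded feedback about near-misses, which a single hard positive/negative label cannot express.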

7.4: Scale of Model and Data

“Large-scale” in this era refers both to data and model size. Models are now trained on billions of words of text across diverse sources, sometimes using data lakes that include web pages, dialogues, scientific text, etc. Moreover, architectures with hundreds of millions of parameters (or more) are employed, often initialized from a pretrained LLM checkpoint. The combination of large scale and pretraining means the models start with a vast amount of linguistic and factual knowledge, which they can then refine into a focused embedding function through fine-tuning. This has led to strong performance gains on benchmarks. A recent survey notes that these state-of-the-art methods have made “significant improvements and innovations in terms of training data quantity, quality and diversity; synthetic data generation for universal text embeddings; as well as using large language models as backbones” (⇒). Consequently, the overall performance on the MTEB benchmark – especially on retrieval, reranking, clustering, and pairwise classification tasks – has been remarkably improved by models employing such advances (⇒).

It’s worth noting that many top models combine multiple advances. As an illustration, Figure 2 highlights representative state-of-the-art universal embedding models and their main contributions (⇒). We can see that most models incorporate more than one innovation (for example, a model might use both diverse multi-task data and an LLM-based initialization). To categorize broadly: some models are data-focused (leveraging diverse or synthetic data), some are method-focused (innovating on loss functions or architectures), and some are LLM-focused (using large models as part of the solution) (⇒). These are complementary approaches, and the best results often come from combining them. In the next section, we delve a bit deeper into the specific intersection of LLMs and embedding models, as this has become a particularly rich area of development.

8: Subset of the Fourth Era: LLM-Based Text Embeddings

A “subset” of the fourth era worth examining on its own is the class of approaches where Large Language Models (LLMs) and text embeddings intersect. As described above, LLMs can enhance embedding models in multiple ways. A recent comprehensive survey (Nie et al., 2024) categorizes the interplay between LLMs and text embeddings into three main themes (⇒):

- LLM-Augmented Text Embedding: In this scenario, LLMs act as auxiliaries to traditional embedding training (⇒). They can generate additional training data or provide feedback signals that help train a smaller embedding model. For example, an LLM might be prompted to paraphrase a sentence or generate semantically similar/dissimilar examples, which the embedding model then uses to learn a better similarity function. Another approach is knowledge distillation: an LLM’s outputs (or internal representations) on certain data are used as a “soft label” for training a simpler model (⇒). By augmenting the embedding training pipeline with the power of LLMs, we can overcome data sparsity and inject world knowledge that the smaller model wouldn’t otherwise have. This has been used to create high-quality datasets for tasks like retrieval – e.g., generating questions and answers (as mentioned) or creating challenging negatives that sharpen the model’s decision boundary (⇒).

- LLMs as Text Embedders: This involves using the LLM itself (usually with some adaptation) to produce embeddings (⇒). Large models like GPT-3 are primarily designed for generating text, not for outputting a single vector. However, embeddings can be obtained from them by pooling the hidden states of a particular layer or by taking the hidden state of a special token (for a decoder-only model, typically the last token). OpenAI’s embedding API, for instance, serves models reported to derive from the GPT family; its text-embedding-ada-002 model returns a 1536-dimensional vector rather than generated text. The survey notes utilizing LLMs’ innate capabilities for embedding generation (⇒) – since these models have absorbed a lot of linguistic structure and factual information during training, their representations of text can be very semantically rich. Techniques in this category include prompting an LLM so that the hidden state it produces summarizes the meaning of the input, or fine-tuning an LLM with a contrastive objective so that its pooled hidden states form a useful embedding. The advantage here is that LLMs might capture nuances that smaller models miss. The disadvantage is that LLMs are huge and expensive to run. Therefore, a lot of current research tries to get “the best of both worlds”: Gecko distills LLM knowledge into a compact embedding model, while LLM2Vec adapts a decoder-only LLM into a text encoder with comparatively light additional training. Nonetheless, some cutting-edge applications simply use an LLM directly for embeddings when accuracy is paramount and cost is a secondary concern.

- Embedding Understanding with LLMs: This is a newer, more exploratory theme. Here LLMs are used to analyze or interpret embedding spaces (⇒). One example might be using an LLM to generate a natural language description of what a particular embedding vector represents – essentially translating a point in embedding space back to words. Another example: using an LLM to evaluate whether an embedding has captured certain information (“Does this embedding likely encode the sentiment of the sentence?”) by probing it in various ways. Since LLMs have strong reasoning abilities, they could help identify patterns or shortcomings in learned embeddings. For instance, an LLM could be fed a set of words that are nearest neighbors in the embedding space and asked to figure out the common theme, thereby revealing what dimension or feature the embedding model is focusing on. This is more of an analysis tool than a direct improvement to embeddings, but it contributes to understanding and subsequently improving embedding models.
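
To make the "LLMs as Text Embedders" idea concrete, here is a minimal sketch of two common ways to turn per-token hidden states into a single vector: mean pooling and last-token pooling. The toy `states` and `mask` values are made up for illustration, and the function names are hypothetical; a real system would take the hidden states from an actual model.

```python
from typing import List

Vector = List[float]


def mean_pool(hidden_states: List[Vector], attention_mask: List[int]) -> Vector:
    """Average the hidden states of non-padding tokens (mask value 1)."""
    kept = [h for h, m in zip(hidden_states, attention_mask) if m == 1]
    if not kept:
        raise ValueError("attention_mask selects no tokens")
    dim = len(kept[0])
    return [sum(h[i] for h in kept) / len(kept) for i in range(dim)]


def last_token_pool(hidden_states: List[Vector], attention_mask: List[int]) -> Vector:
    """Return the hidden state of the last non-padding token."""
    for h, m in zip(reversed(hidden_states), reversed(attention_mask)):
        if m == 1:
            return list(h)
    raise ValueError("attention_mask selects no tokens")


# Toy hidden states for 4 token positions (the last one is padding), dim 3.
states = [[1.0, 0.0, 2.0], [3.0, 2.0, 0.0], [2.0, 4.0, 1.0], [9.0, 9.0, 9.0]]
mask = [1, 1, 1, 0]

mean_vec = mean_pool(states, mask)        # [2.0, 2.0, 1.0]
last_vec = last_token_pool(states, mask)  # [2.0, 4.0, 1.0]
```

Last-token pooling is a natural fit for decoder-only models, where only the final position has attended to the whole input; mean pooling is more common with bidirectional encoders. Which works better for a given model is an empirical question.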

Combining these, LLM-based approaches extend the capabilities of text embedding models by injecting them with the knowledge and generalization ability of large models. Large models can capture subtle semantics, world knowledge, and even reasoning patterns that smaller models trained on task data might not. By augmenting embeddings with LLMs (either via data or as direct encoders), we get embeddings that are closer to human-like understanding. For example, if a smaller model struggled to see the connection between “Dr. King” and “Martin Luther King Jr.” because it never saw enough data linking them, an LLM – which likely knows that they are the same person – can provide that link either by generating a related sentence or by producing similar embeddings for both. Thus, LLMs help overcome knowledge gaps and bring robustness to embedding models.

A key contribution of Nie et al.’s survey is highlighting that while LLM-enhanced embeddings are powerful, they also introduce new challenges (⇒). Some pre-LLM issues persist (like domain gaps – an embedding model might still falter on biomedical text if not trained on it, even if it’s universal in other respects), and new issues arise (like the high computational cost of using LLMs, or ensuring that synthetic data from LLMs doesn’t inadvertently introduce biases or errors). Nonetheless, the trajectory is clear: LLMs and embedding models are increasingly intertwined, and future embedding techniques will likely continue this collaboration – using LLMs to train and explain embeddings, and using embeddings to extend the reach of LLMs to efficient semantic tasks.

9: Applications of Universal Text Embeddings

One of the reasons universal embeddings are so exciting is their wide applicability. By design, a universal embedding model can be plugged into many tasks with little or no task-specific training. Here are some of the key applications and use-cases where these embeddings shine:

- Semantic Search and Information Retrieval: Perhaps the most impactful use is in search engines and retrieval systems. Universal sentence embeddings can encode user queries and documents into the same vector space, enabling efficient similarity search (via nearest neighbor lookup). This powers modern semantic search in a variety of settings – from web search, to legal document search, to enterprise knowledge base lookup. For example, question-answer retrieval systems rely on embedding the question and candidate answers so that the correct answer is closest in the vector space. Companies have integrated such embedding-based search into tools like chatbots (the bot finds relevant knowledge base articles by embedding the conversation and documents) and even into internet-scale search (e.g., Bing uses dense embeddings alongside traditional methods in its search pipeline). These systems benefit from universal embeddings because the same embedding model can handle a range of queries without needing retraining for each new topic.

- Clustering and Topic Discovery: Embeddings enable organizing large collections of texts into meaningful clusters automatically (⇒). For instance, news articles can be embedded and then clustered to find groups covering the same story, even if they use different vocabulary. In business intelligence, companies embed customer feedback or support tickets and cluster them to discover recurrent themes or issues. The better and more universal the embedding, the more coherent these clusters are (since the model consistently captures semantic similarity). This is especially useful when dealing with mixed data that includes various topics – a universal model will not be thrown off by shifting from one domain to another in the dataset.

- Text Classification and Analytics: While classification tasks can be done by fine-tuning models, one can also use universal embeddings as features for classification – especially when labeled data is limited. For example, to categorize a set of documents into topics, one can simply embed each document using a universal encoder and train a simple classifier (or even use nearest neighbors) on those embeddings. Because the embeddings already capture high-level meaning, even a linear classifier can perform well (⇒). This approach is used in industry for things like routing support tickets (embedding the text of the ticket to decide which department should handle it), content moderation (embedding a post to determine if it’s similar to known policy-violating content), or sentiment analysis (embedding a review and then mapping that to a sentiment score).

- Pairwise Matching Tasks: Universal embeddings are very handy for tasks that involve determining the relationship between two pieces of text. Examples include detecting duplicates or paraphrases (do two descriptions refer to the same issue?), entailment (does sentence A imply sentence B?), and question-answer pairing (does this question match that answer or that FAQ entry?). In each case, the texts can be embedded and a simple similarity or distance metric applied. Many online forums and Q&A sites use this to suggest similar questions while you type a new question, for instance. The MTEB benchmark itself evaluates many of these under categories like pairwise classification and semantic textual similarity.

- Multilingual Applications: Because some recent models are trained multilingually, a single embedding space can encompass multiple languages. This unlocks cross-lingual applications, such as retrieving documents in French that are relevant to an English query by embedding both and comparing them, or clustering documents irrespective of language (grouping a Spanish and an English article if they talk about the same event). Companies with global user bases find this valuable for building unified systems without maintaining separate models per language.

- Recommendation Systems: Text embeddings also play a role in recommendation when text is involved, such as content recommendation or matching users to content. For example, a job posting and a job seeker’s profile can be embedded into the same space to see if they are a good match. Or a piece of news and a user’s reading history (represented as an average of embeddings of articles they read) can be compared to recommend news. Universal embeddings help because they can encode any text description – job descriptions, resumes, product descriptions, user reviews – into a comparable form. Some e-commerce platforms embed product descriptions and user-generated text (reviews, queries) to improve search and recommendations, going beyond keyword matching to semantic matching.

- Downstream NLP pipelines: Even when using large language models, embeddings play a supporting role. For example, retrieval-augmented generation (RAG) systems first retrieve relevant text via embeddings to feed into an LLM that will produce an answer or summary (⇒). Embeddings are also used to index and filter content (like finding all texts in a database that are outliers or that match a certain pattern as a first step before deeper analysis by an LLM or human). In real-time applications where speed is crucial, document embeddings can be computed ahead of time and stored in an approximate nearest-neighbor index, which allows lookups in time sublinear in the collection size. This is why many production systems rely on them for the first pass of information gathering.
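
Most of the applications above reduce to the same primitive: embed the texts once, then rank candidates by similarity to a query vector. The sketch below shows that primitive with brute-force cosine similarity. The document IDs and three-dimensional vectors are invented placeholders standing in for the output of a real embedding model.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: Vector, doc_vecs: Dict[str, Vector], k: int) -> List[Tuple[str, float]]:
    """Score every document against the query and keep the k best."""
    scored = [(doc_id, cosine(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical precomputed document embeddings (placeholders, not model output).
doc_vecs = {
    "refund_policy": [0.9, 0.1, 0.0],
    "shipping_times": [0.1, 0.9, 0.1],
    "password_reset": [0.0, 0.2, 0.9],
}
query_vec = [0.8, 0.2, 0.1]  # stands in for the embedding of a refund question

hits = top_k(query_vec, doc_vecs, k=2)
```

This scan is linear in the number of documents, which is fine for small collections; larger ones typically swap the loop for an approximate nearest-neighbor index while keeping the same interface.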

9.1: Industry Examples

Beyond the Google Search example with BERT, we have plenty of modern instances. Streaming services embed metadata and even textual descriptions of movies to recommend similar content to users (this handles the “cold start” problem where a new movie with few views can be recommended based on its embedding similarity to popular ones). Social media platforms might embed posts to detect near-duplicate spam or to organize content by themes. Companies like OpenAI have an embeddings API that developers use widely for building semantic search in applications – from personal note-taking apps (that let you semantically search your notes) to customer support (finding answers from documentation). Benchmark suites like MTEB also reflect real-world relevance by including tasks derived from Wikidata, StackExchange, news, etc., ensuring that models aren’t just tuned to academic datasets but have general utility (⇒).

In summary, universal embeddings are becoming the “Swiss Army knife” of NLP – a single tool (or model) that can be applied to numerous problems with minimal adaptation. This broad usefulness is exactly why the research community and industry have invested in making embeddings more universal and robust.

10: Evaluation and Benchmarks: The Role of MTEB

Evaluating embedding models, especially universal ones, requires looking at performance on a wide array of tasks. The Massive Text Embedding Benchmark (MTEB) has emerged as a pivotal benchmark for this purpose. Let’s discuss what MTEB entails and why it’s so significant:

10.1: What is MTEB?

MTEB is a benchmark introduced by Muennighoff et al. (2022) to provide a comprehensive evaluation of text embedding methods (⇒). It was motivated by the observation that previous evaluations were narrow: an embedding might be tested only on semantic similarity tasks and then assumed to be good in general, even though it was unclear whether strong STS results carry over to tasks such as clustering or reranking (⇒). MTEB instead spans 8 distinct task types covering a variety of real-world uses (⇒). These task categories are:

- Bitext Mining: finding translation pairs across languages,

- Clustering: grouping texts by similarity without labels,

- Classification: using embeddings for supervised classification tasks,

- Pairwise Classification: making decisions about pairs of texts (like entailment or duplicate detection),

- Reranking: ordering a list of candidate results for a query,

- Retrieval: finding relevant documents for a given query,

- STS (Semantic Textual Similarity): scoring how similar two sentences are,

- Summarization Evaluation: using embeddings to judge the quality or relevance of summaries.

In total, MTEB initially included 58 datasets in 112 languages, making it not only task-diverse but also multilingual (⇒). It benchmarked 33 models in the original study, ranging from older methods like GloVe and USE to newer ones like Sentence-BERT variants (⇒).

10.2: Significance of MTEB

The introduction of MTEB was a turning point because it exposed the strengths and weaknesses of various embeddings across many scenarios. One key finding was that no model dominated every task – for instance, a model might do well on retrieval but not as well on clustering (⇒). This suggested that the field “has yet to converge on a universal text embedding method” that is supreme on all tasks (⇒). In other words, there was room for improvement and a clear goal: to develop embeddings that perform consistently well across this diverse benchmark. MTEB effectively became the test for “universal” claims. If a model is truly universal, it should be near the top on each section of MTEB.

From a research perspective, MTEB encourages holistic modeling. Instead of optimizing for one metric, model creators now have to consider multiple facets of language understanding. It also prevents the scenario where a new embedding model is only compared on a couple of tasks of the authors’ choosing – now the community expects to see a full MTEB evaluation, which brings objectivity. The availability of an open leaderboard (hosted on Hugging Face) further spurred competition and rapid progress, as results are easily comparable.

10.3: How MTEB Evaluates Models

MTEB provides a unified framework to plug in an embedding model and test it on all tasks with minimal effort (⇒). For classification tasks, it uses the embeddings as features for a simple classifier (such as logistic regression) to see how well they separate classes. For retrieval tasks, it ranks documents by embedding similarity to each query and scores the ranking with metrics such as recall@k or nDCG@k, with nDCG@10 as the main retrieval score. Because all models are evaluated with the same pipelines and metrics, the comparison is like-for-like. This also reduces the burden on researchers: one doesn’t need to set up dozens of separate evaluations, because MTEB’s toolkit automates them (the authors note that a model can be evaluated by adding fewer than 10 lines of code (⇒)).
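
As a rough illustration of the retrieval metrics mentioned above, the following sketch computes recall@k and nDCG@k for a single query. It is not MTEB's own implementation: it assumes the common formulation with linear gain and a log2 rank discount, and the ranking and relevance judgments are toy values.

```python
import math
from typing import Dict, List


def recall_at_k(ranked_ids: List[str], relevance: Dict[str, int], k: int) -> float:
    """Fraction of all relevant documents that appear in the top k."""
    relevant_ids = {doc_id for doc_id, grade in relevance.items() if grade > 0}
    if not relevant_ids:
        return 0.0
    found = sum(1 for doc_id in ranked_ids[:k] if doc_id in relevant_ids)
    return found / len(relevant_ids)


def dcg_at_k(gains: List[float], k: int) -> float:
    """Discounted cumulative gain: the gain at 1-based rank r is divided by log2(r + 1)."""
    return sum(gain / math.log2(rank + 2) for rank, gain in enumerate(gains[:k]))


def ndcg_at_k(ranked_ids: List[str], relevance: Dict[str, int], k: int) -> float:
    """DCG of the ranking divided by the DCG of an ideal ranking."""
    gains = [float(relevance.get(doc_id, 0)) for doc_id in ranked_ids]
    ideal_gains = sorted((float(g) for g in relevance.values()), reverse=True)
    ideal = dcg_at_k(ideal_gains, k)
    return dcg_at_k(gains, k) / ideal if ideal > 0 else 0.0


# Toy example: the system ranked four documents; d1 and d2 are the relevant ones.
ranking = ["d3", "d1", "d7", "d2"]
qrels = {"d1": 1, "d2": 1}

recall = recall_at_k(ranking, qrels, k=3)  # 0.5: only d1 is in the top 3
ndcg = ndcg_at_k(ranking, qrels, k=3)      # about 0.387
```

Recall only asks whether relevant documents made the cut, while nDCG also rewards placing them near the top, which is why the two can disagree about which model is better.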

10.4: Impact on Recent Models

As noted earlier, the focus of our reviewed paper was precisely on the top-performing models on MTEB. Since MTEB’s release, new universal embedding models are often introduced alongside claims like “achieves state-of-the-art on MTEB” or “improves average score on MTEB by X%”. This benchmark has become to text embeddings what ImageNet is to image models – a standard yardstick. For example, the E5 model and the BGE model both demonstrated significant improvements on MTEB, especially on certain task categories like retrieval. The benchmark also highlights remaining weaknesses; the reviewed paper’s authors point out that despite great gains on retrieval and related tasks, there’s little improvement on summarization tasks even for current top models (⇒). This insight comes directly from seeing the breakdown of MTEB scores.

In summary, MTEB’s role is two-fold: it evaluates how universal a text embedding model really is, and it guides research by pinpointing where models struggle. By having a common ground for comparison, it has accelerated the field’s progress toward truly general-purpose embeddings (⇒) (⇒). Any NLP practitioner choosing an embedding model today can consult MTEB results to pick one that best suits their needs, and they can be confident that the model was vetted against a broad range of scenarios.

11: Challenges and Future Directions

Despite the rapid progress in text embeddings (especially with the universal models of the fourth era), several challenges and limitations remain. Researchers have identified these gaps and are actively exploring solutions. We will outline some of the key challenges and promising future directions:

11.1: Task and Domain Coverage Gaps

Even “universal” embeddings are not truly universal yet. One noted issue is that current models, while strong in many areas, show minimal improvement on certain tasks like summarization (⇒). This suggests that the way embeddings capture document-level meaning or summary-quality information is insufficient. Additionally, most models still heavily prioritize English and a few major languages. Many are trained on English data and only later extended to other languages. This lack of inherent multilinguality means that if you apply them to, say, Swahili or Vietnamese text, they may be less effective. Similarly, domain diversity is a concern (⇒): models might be very good on the domains seen during training (like Wikipedia or news text) but falter on niche domains (medical, legal, tweets, etc.). The current benchmarks themselves (including MTEB) have limited domain variety. As the reviewed paper highlights, there’s a need for benchmarks and training data that cover specialized fields such as finance, health, or scientific text to test and improve domain generalization (⇒).

11.2: Efficiency and Practicality

As models grow in size and training data, sustainability and cost become challenges (⇒). Training a gigantic embedding model on dozens of datasets is resource-intensive. Inference can also be costly if the model is large – embedding millions of sentences might be too slow or expensive for real-time applications. Future research is looking at making universal embeddings more efficient: this could involve model compression (distilling large models into smaller ones without losing much accuracy), approximate embedding techniques, or smarter use of resources (for example, adaptive inference that uses a larger model only when needed). There’s also interest in sustainable training, meaning methods that require less energy or can reuse pre-trained components to avoid training from scratch.

11.3: Better Use of Instructions and Context

As we incorporate instructions into embedding models, an open question is how to do it in the most generalizable way. For instance, how should an embedding model be prompted or conditioned for different tasks to maximize performance? Understanding how instructions influence the embedding space, and how to systematically incorporate new instructions for tasks not seen in training, is a frontier. The reviewed paper suggests a more in-depth study of the impact of instructions on symmetric versus asymmetric tasks (⇒). In a symmetric task such as STS, the two texts play identical roles; in an asymmetric task such as retrieval, one text is a query and the other is a document. Ensuring the model can generalize to a task given only a natural language description of it (zero-shot) would be very powerful.
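
One simple way instructions reach an embedding model is as a text prefix added before encoding. The sketch below uses the `query: ` / `passage: ` convention of the E5 family as an example; other models expect different instruction strings, and the helper names here are hypothetical.

```python
from typing import List, Tuple

# Prefix convention of the E5 family, used here purely as an example.
# Other instruction-tuned embedders expect different strings.
QUERY_PREFIX = "query: "
PASSAGE_PREFIX = "passage: "


def prepare_asymmetric(query: str, passages: List[str]) -> Tuple[str, List[str]]:
    """Retrieval-style input: the two sides play different roles, so they get different prefixes."""
    return QUERY_PREFIX + query, [PASSAGE_PREFIX + p for p in passages]


def prepare_symmetric(text_a: str, text_b: str) -> Tuple[str, str]:
    """STS-style input: both texts play the same role, so they share one prefix."""
    return QUERY_PREFIX + text_a, QUERY_PREFIX + text_b


retrieval_query, retrieval_passages = prepare_asymmetric(
    "capital of France", ["Paris is the capital of France."]
)
sts_a, sts_b = prepare_symmetric("A cat sleeps.", "A cat is sleeping.")
```

The prepared strings would then be passed to the encoder as usual. Using the wrong prefix does not raise an error; it only degrades quality, which is part of why the effect of instructions is hard to study systematically.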

11.4: New Similarity Measures and Objectives

Almost all current embedding models rely on cosine or dot-product similarity in vector space to gauge closeness of meaning. However, human semantic judgment isn’t always perfectly symmetric or linear. For example, we often say “A is a good summary of B” but not vice versa – an asymmetry that cosine similarity can’t capture because it’s symmetric. The reviewed work hints at exploring novel (dis)similarity measures that can produce human-like asymmetric judgments (⇒). This could involve directional similarity or incorporating external knowledge of entailment. It’s a challenging research problem to define mathematical measures that align better with human perception for various tasks.
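
The symmetry of cosine similarity is easy to verify numerically. The sketch below contrasts it with a toy directional score, the scalar projection of one vector onto another divided by the target's norm. This `projection_ratio` is only an illustration of what an asymmetric measure can look like; it is an assumption of this example, not a measure proposed in the reviewed paper.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def cosine(a: Vector, b: Vector) -> float:
    """Symmetric by construction: swapping a and b gives the same value."""
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))


def projection_ratio(a: Vector, b: Vector) -> float:
    """Toy asymmetric score: how much of b is 'covered' by a, i.e. (a . b) / (b . b)."""
    return dot(a, b) / dot(b, b)


short_text = [1.0, 0.0]  # placeholder embedding of a narrow text
long_text = [1.0, 1.0]   # placeholder embedding of a broader text

same_both_ways = math.isclose(cosine(short_text, long_text), cosine(long_text, short_text))
short_covers_long = projection_ratio(short_text, long_text)  # 0.5
long_covers_short = projection_ratio(long_text, short_text)  # 1.0
```

Here the broader vector fully covers the narrow one but not the other way around, the kind of directional relationship a symmetric score cannot express.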

11.5: Handling Longer Texts and Compositionality

As of now, many embedding models work best on sentences or short paragraphs. Handling very long documents (or even multi-document inputs) is still not a solved problem – models either truncate, average sentence embeddings (losing structure), or use hierarchical approaches. Future embeddings might need to encode long texts (thousands of tokens) in a way that preserves the overall discourse and topic structure. This goes hand-in-hand with ideas from summarization and hierarchical modeling. Furthermore, there is interest in compositional embeddings – the ability to compose embeddings of parts to get an embedding of the whole (for example, embed paragraphs and combine them to get a document embedding). This is related to the multi-granularity challenge mentioned (from words to sentences to docs) (⇒).
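
The "split, embed, and average" strategy mentioned above can be sketched as follows. The `toy_embed` function is a stand-in (a hashed bag of words) so the example runs without a model; in practice it would be replaced by a real encoder. The chunk size and the length-weighted average are illustrative choices.

```python
from typing import Callable, List

Vector = List[float]


def toy_embed(text: str, dim: int = 8) -> Vector:
    """Stand-in for a real encoder: hashed bag-of-words counts."""
    vec = [0.0] * dim
    for word in text.lower().split():
        vec[sum(ord(ch) for ch in word) % dim] += 1.0
    return vec


def chunk_words(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    if max_words <= 0:
        raise ValueError("max_words must be positive")
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed_long(text: str, embed: Callable[[str], Vector], max_words: int) -> Vector:
    """Embed each chunk, then take a length-weighted mean of the chunk vectors."""
    chunks = chunk_words(text, max_words)
    if not chunks:
        raise ValueError("text is empty")
    weights = [len(chunk.split()) for chunk in chunks]
    vectors = [embed(chunk) for chunk in chunks]
    total = sum(weights)
    dim = len(vectors[0])
    return [sum(w * v[i] for w, v in zip(weights, vectors)) / total for i in range(dim)]


document = "a b c d e f g h i j"
chunks = chunk_words(document, max_words=4)  # ["a b c d", "e f g h", "i j"]
doc_vec = embed_long(document, toy_embed, max_words=4)
```

Averaging discards the order of the chunks and any structure across them, which is exactly the loss described above; hierarchical approaches try to keep that information.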

11.6: Continual Learning and Adaptation

Languages and domains evolve. A static embedding model might become outdated (e.g., missing new slang or newly important concepts) or might need adaptation to a new domain. A future direction is embedding models that can continually learn from new data without forgetting old capabilities. There’s also interest in personalization – could an embedding model be fine-tuned lightly to better capture, say, a particular user’s vocabulary or a company’s internal jargon, while still remaining general otherwise?

11.7: Integration with Knowledge and Reasoning

Some researchers speculate about embeddings that not only capture surface-text similarity but also incorporate knowledge graphs or logical reasoning. For example, if we know from a knowledge base that “X is a subtype of Y,” perhaps the embeddings of X and Y should reflect that relationship in a more structured way. This blurs the line between pure distributional semantics (learning from text alone) and symbolic knowledge integration.

11.8: Evaluation Improvements

As new models push the envelope, evaluation methods must also evolve. We might see more comprehensive benchmarks (as suggested, covering more domains, more languages) (⇒). Also, evaluation might go beyond task performance to consider fairness, bias, and ethics: ensuring the embeddings don’t unduly cluster or separate text based on attributes like race or gender when not appropriate, or that they don’t propagate harmful biases present in training data. Another aspect is redundancy and efficiency in benchmarks – evaluating on dozens of datasets can be slow; researchers are looking into whether fewer datasets could predict performance on others, to streamline testing (⇒).
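
One way to look for redundancy in a benchmark is to check how strongly the scores on one dataset correlate with the scores on another across a set of models. The sketch below does this with Pearson correlation. The dataset names and scores are made-up placeholders chosen to illustrate the computation, not real benchmark results, and the 0.95 threshold is arbitrary.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if std_x == 0.0 or std_y == 0.0:
        return 0.0
    return cov / (std_x * std_y)


def redundant_pairs(scores: Dict[str, List[float]], threshold: float) -> List[Tuple[str, str]]:
    """Dataset pairs whose per-model scores correlate at or above the threshold."""
    return [
        (a, b)
        for a, b in combinations(sorted(scores), 2)
        if pearson(scores[a], scores[b]) >= threshold
    ]


# Made-up scores: one list per dataset, one entry per model (same model order).
scores = {
    "dataset_a": [0.60, 0.65, 0.70, 0.80],
    "dataset_b": [0.50, 0.56, 0.61, 0.72],
    "dataset_c": [0.40, 0.38, 0.45, 0.41],
}

pairs = redundant_pairs(scores, threshold=0.95)  # [("dataset_a", "dataset_b")]
```

A high correlation suggests that one dataset adds little information about model ranking beyond the other, though with only a handful of models such estimates are noisy.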

In summary, while universal embeddings have come far, they are not a solved problem. The fourth era has opened up new possibilities and also revealed new challenges. The path forward involves making embeddings even more inclusive (covering all languages, domains, tasks), efficient, and aligned with human understanding. The interplay with LLMs will likely continue – possibly leading to an era where the distinction between an “embedding model” and an “LLM” is blurred, or we have hybrids that leverage strengths of both. For NLP practitioners and researchers, the takeaway is that embeddings remain an active area: one should be mindful of their current limitations (e.g., use specialized models for specialized domains, if needed) but also keep an eye on rapid developments that might soon overcome these hurdles.

12: Conclusion and Summary

The evolution of text embedding models from the early days to the present fourth era represents a remarkable journey of innovation in NLP. We began with simple count-based representations (Bag-of-Words, TF-IDF) and moved to learned distributed word embeddings that captured semantic similarity in a dense vector form. This was the first major era of embeddings, epitomized by Word2Vec, GloVe, and fastText, which transformed NLP by providing a way to encode meaning numerically (⇒) (⇒). However, those early embeddings were limited by context insensitivity – a single vector per word cannot handle multiple meanings or subtle context differences (⇒).

The second era introduced contextual embeddings, with models like ELMo and BERT that revolutionized how we represent text (⇒) (⇒). These models demonstrated that embeddings can be dynamic, changing with context, thereby capturing the true meaning of words in each sentence. This era leveraged deep neural networks and large-scale pretraining, yielding representations that drove dramatic improvements on many NLP tasks (from QA to search). Contextual models became the new norm for high-performance NLP, and even search engines and commercial NLP services adopted them to better understand user queries and content (⇒).

Building on that, the third era focused on sentence-level representations, acknowledging that we often need a single vector for a whole piece of text. Methods like Sentence-BERT and Universal Sentence Encoder showed how to obtain meaningful sentence embeddings that retain the prowess of contextual models while being directly usable for similarity search, clustering, and more (⇒) (⇒). These embeddings enabled practical applications like semantic search and scalable semantic analysis across sentences and paragraphs.

Finally, we have entered the fourth era of universal text embeddings, which is a paradigm shift in its ambition. Instead of optimizing for one type of task, models now strive to be one-size-fits-all solutions, performing well on the entire landscape of language tasks (⇒). This era was made possible by massive data, multi-task training regimes, and by harnessing the power of LLMs (⇒). The resulting models (GTE, BGE, E5, Gecko, etc.) are raising the bar on what one can expect from an embedding – they inch closer to the ideal of a text representation that you can plug into any problem and get strong results. The introduction of the MTEB benchmark was a timely catalyst, allowing objective measurement of this “universality” and spurring rapid progress (⇒) (⇒).

Why is the fourth era a paradigm shift? Because it changes how we think about deploying NLP solutions. Instead of training a custom model for each new task, one can take a universal embedder and immediately apply it, often with just a simple classifier or a nearest-neighbor search on top. It abstracts a huge amount of linguistic and semantic knowledge into a reusable form. In practical terms, this means faster development cycles and more consistent performance across tasks. It also means that improvements in the universal models benefit a wide array of applications simultaneously. The fourth era models are not just incremental improvements; they represent a consolidation of NLP knowledge into general-purpose systems.

12.1: Key Takeaways

For NLP practitioners, it’s important to understand the capabilities and limits of these eras. Static word embeddings are fast and useful for some purposes but cannot handle context. Contextual embeddings are powerful, especially when fine-tuned, but might require task-specific adjustments. Sentence embeddings make semantic similarity scalable. And the latest universal embeddings offer an attractive all-in-one package, though one should keep an eye on their performance on the specific target domain and task (as truly universal performance is challenging). Moreover, LLMs and embedding models are converging – techniques from one are bleeding into the other, which hints that future language understanding systems might unify generation and embedding tasks more seamlessly.

In conclusion, text embeddings have come a long way: from counting words to capturing meaning in context, and now towards capturing meaning across tasks. The fourth era indeed represents a new chapter – a move towards general-purpose language understanding engines. As research continues, we can expect embeddings to become even more universal, bridging gaps of language and domain, and integrating ever more tightly with large language models. This journey through four eras underscores an overarching trend: moving towards representations that are closer to how humans understand language – flexible, context-aware, and general. Each era built the foundation for the next, and now standing in the fourth era, we have tools that were unimaginable a decade ago. For anyone working with text data, these developments mean better performance and fewer barriers to extracting insights from language. The field is poised for further breakthroughs, and it wouldn’t be surprising if a “fifth era” eventually emerges, perhaps characterized by truly unified models that combine the strengths of LLMs with efficient, universal representations. For now, we can celebrate the progress so far and enthusiastically watch (or contribute to) the ongoing advances in text embedding research (⇒) (⇒).