What is joint distillation? Why do we need it? ⚗️

'Knowledge distillation' (a.k.a. "the teacher-student framework") was introduced in 2015 and involved training a small 'student' network to predict the logits [i.e. softmax input of the final layer] of a large 'teacher' network, the reasoning being that these 'activations' are the essence of generalisation capacity sought.

I've always assumed the name “distillation” is a nod to the changes in the temperature hyperparameter involved in the softmax (the temperature is raised during training and returns to 1 in the student), but it's actually better to think of that as controlling the 'softness' of the labels.

This paper describes it as transferring the knowledge "implied" in the large network to the smaller one, a nice way of phrasing it.

A recent paper has questioned whether it "really works", and concluded that it amounts to a very difficult optimisation problem that is simply not always necessary to solve well in practice! This paper also criticised the emphasis on top-k prediction accuracy, which is not in itself the goal of distillation, but rather generalisation is.

Joint distillation is an update of this paradigm using mutual learning, so the student and teacher become 'peers'.

deep mutual learning (CVPR 2018) is proposed, where an ensemble of students learn collaboratively and teach each other throughout the training process. Actually, mutual learning can be viewed as an online knowledge distillation strategy

They combine this with 'self-distillation', introduced at ICCV 2019 under the memorable title of “Be Your Own Teacher” (💾):

The networks are firstly divided into several sections. Then the knowledge in the deeper portion of the networks is squeezed into the shallow ones.

- 📄 Zhang et al. (2019) Be Your Own Teacher: Improve the Performance of Convolutional Neural Networks via Self Distillation

They then throw meta-learning (so-called 'learning to learn') into the mix, ending up with "internal meta self-distillation and external mutual learning".

we mainly utilize meta-learning to generate the adaptive soft label for the intermediate SR supervision in the self-distillation process, with that it can further help to reduce the capacity gap between the deeper and the shallower layer.

An "adaptive soft label" is

We need it to generalise ...

Can I see a visual depiction of the super-resolution result achieved in this work? 👀

Figure 1 is the result they're most proud of, the 'headline' on page 1, and shows the progression from IMDN (presented at MM 2019 and won a challenge at the AIM workshop at ICCV 2019) through PISR (presented at ECCV 2020) to their model JDSR.

The predecessors can't manage to reconstruct the correct direction of the straight lines. IMDN just seems to 'guess' and makes up the wrong direction, and PISR seems to hedge and draw lines in both directions (it looks like a checkerboarding artifact common in CNNs).

On the 2nd row, note the detail of the horizontal line in the top left is reconstructed by JDSR but not its predecessors.

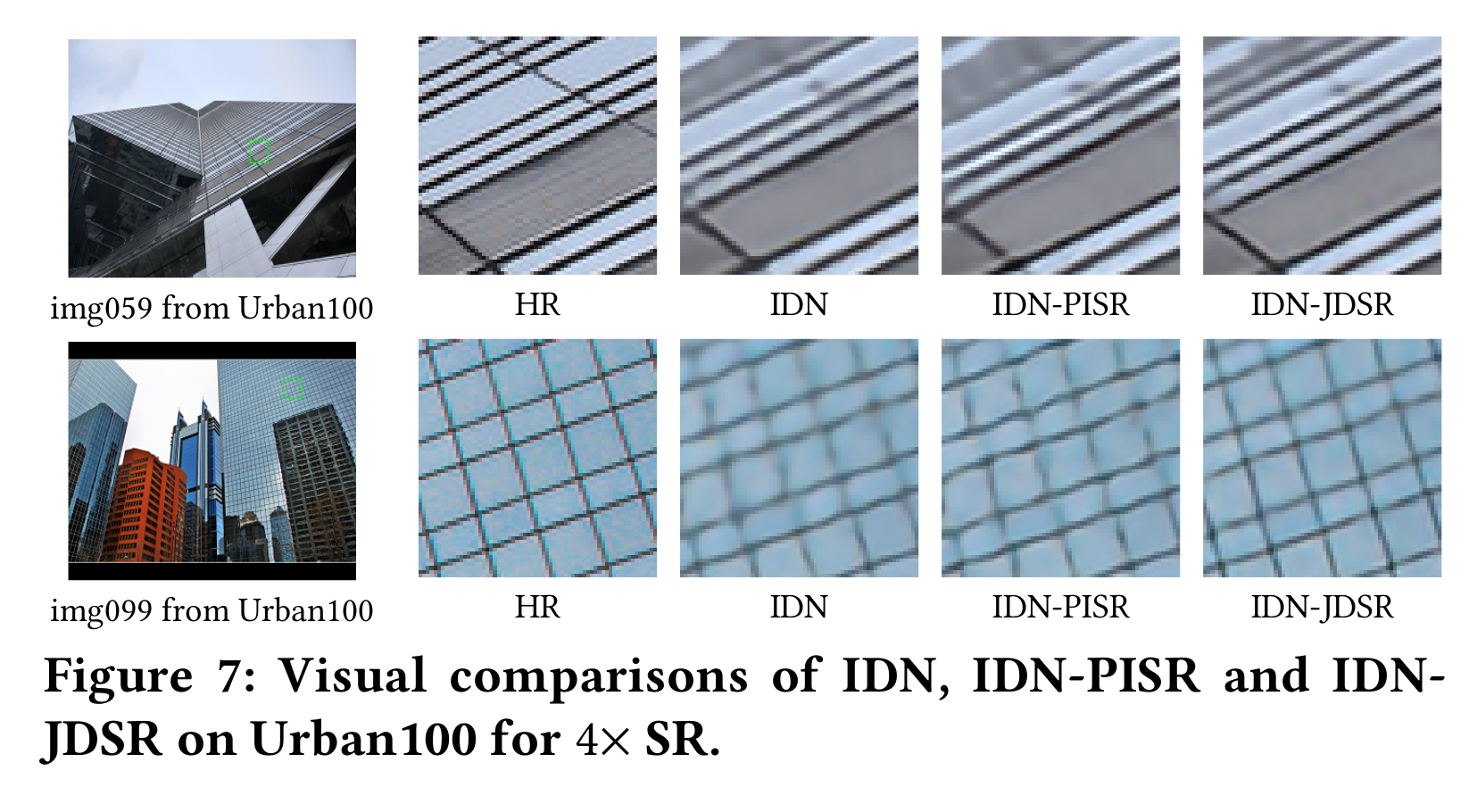

Figure 7 shows the preservation of straight lines. The other models compared against are previous iterations in a lineage of knowledge distillation approaches to SISR.

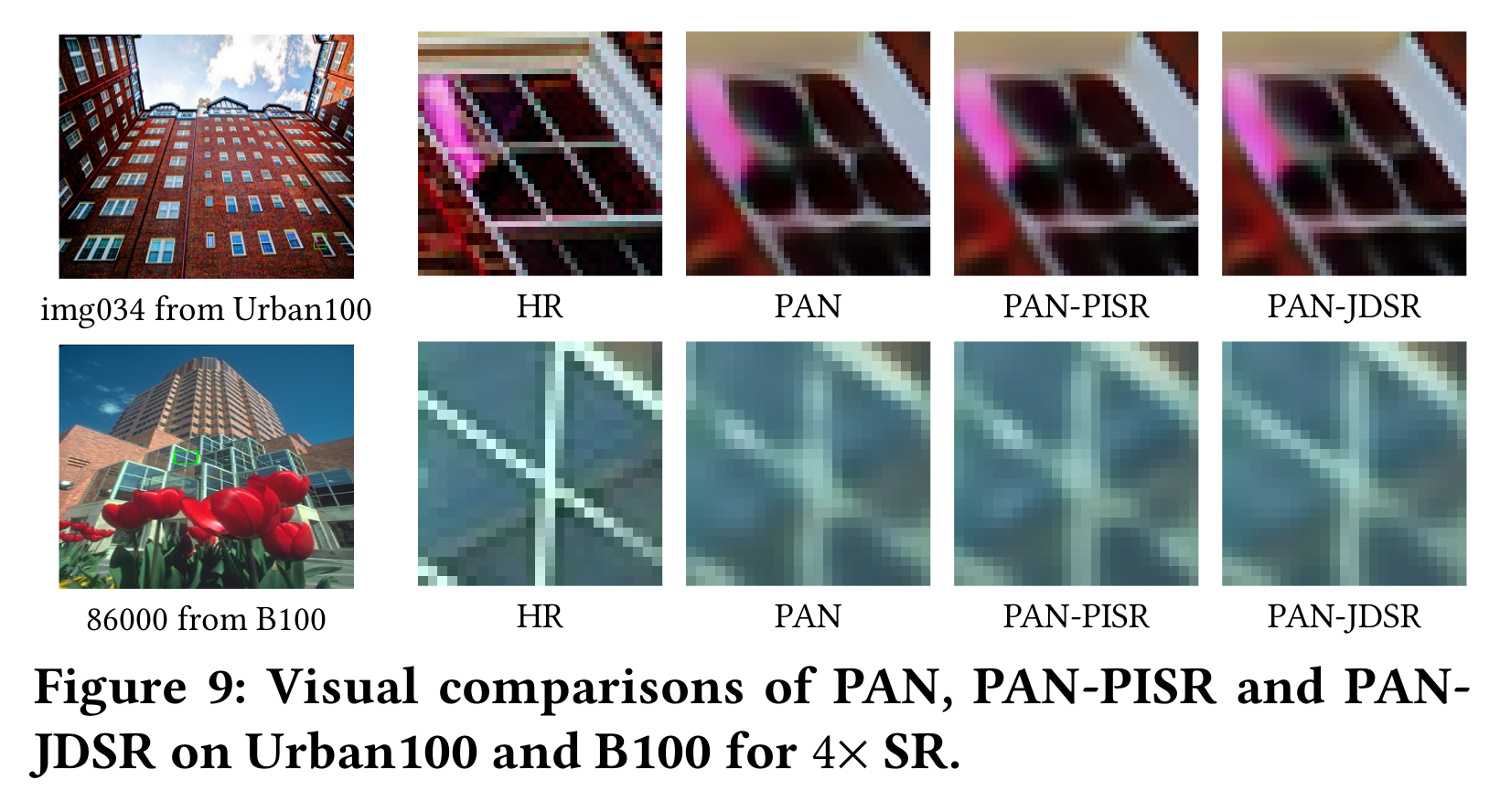

Figure 9 is the least impressive of the examples IMHO, the nice parallel lines of the window pane in the top row get smushed and rounded at the corners.

As well as the improved super-resolution output, there's also an improved bicubic-like shrinking procedure to look at.

A bit of standard terminology in this field is required to appreciate the latter. As well as 'single image super-resolution' (SISR), note these 3 acronyms:

- LR = low resolution, the downsampled [usually by a standard algorithm like bicubic]

- HR = high resolution, the ground truth high res. image,

- SR = super-resolution, the upsampled image which a machine learning model produces

- Rarely will it be close to the HR ground truth, but this gap can be measured by either 'quantitative' or 'visual' metrics of relative quality

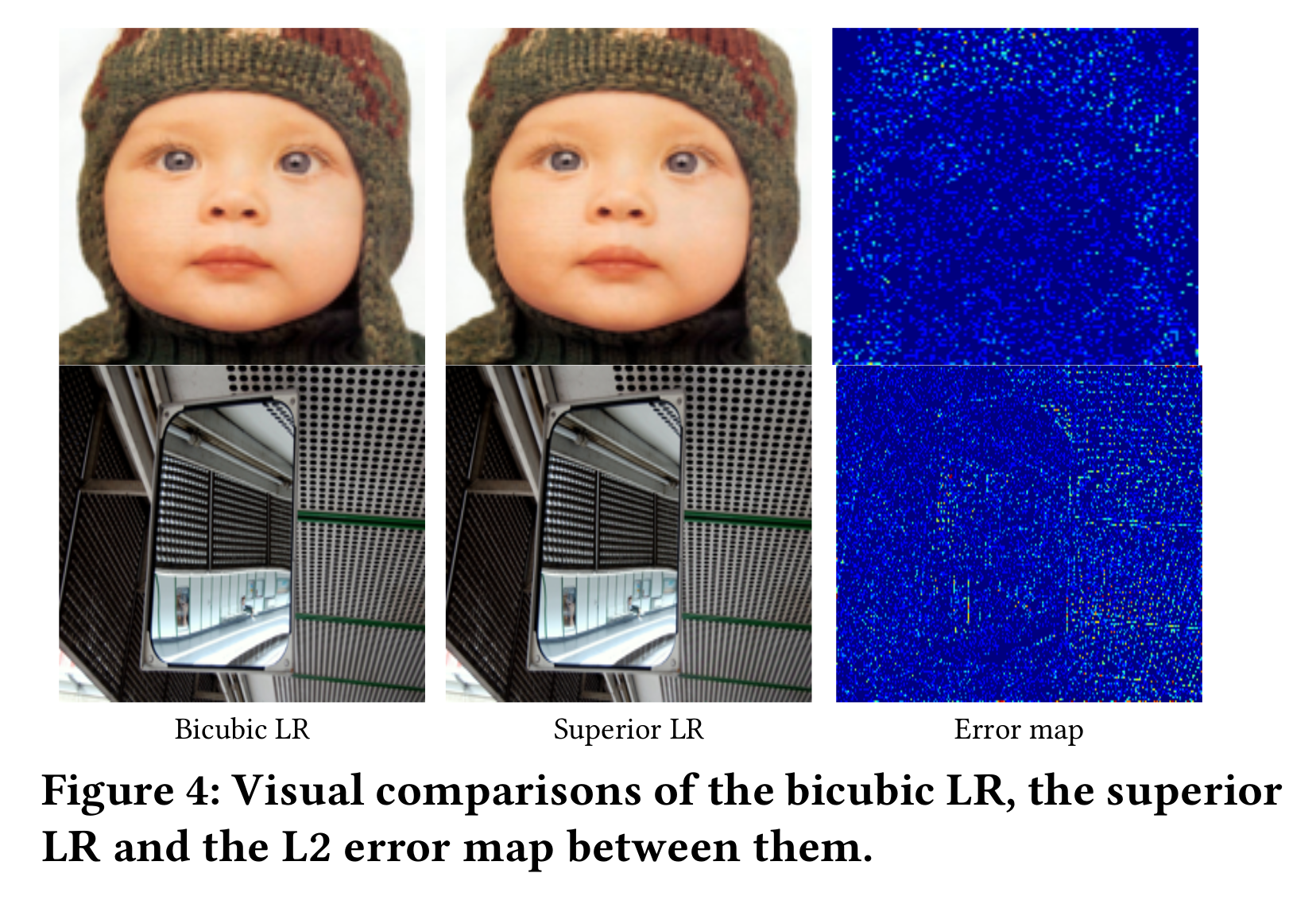

This paper introduces a new type of low-res. image it dubs 'superior LR'. This is different from a regular LR image as there's been some training done to learn a more realistic downsampling.

This is a familiar concept, sort of... Papers like TextZoom have criticised the use of synthetic training data for this task, and instead proposed a dataset of paired images taken with different focal lengths (the authors called it the 'authentic' way). It's generally accepted that bicubic downsampling artifacts aren't really representative of images 'in the wild', but Luo et al. here modify the algorithm to be a learnt one instead.

Learnt algorithms for compression have become pretty widespread in recent years — take Facebook's Zstd archive format for example.

Figure 4 here shows that they do indeed differ. There's no ablation study that demonstrates what happens if you don't use the 'superior LR' but if you overlay the two you can discern that some of the circular holes in the grid photo are slightly sharper (in particular along the right side next to the edge of the mirror, as also highlighted in the "heat map alongside)

Is this paper building on existing ideas? What specifically is new in it? 💡

The paper name checks a handful of earlier works (a lot of papers in this field take a pre-existing model and adapt it very slightly). It makes 2 main criticisms of the earlier models:

- "they all rely on an external model to provide auxiliary information"

- "the information flow of the traditional teacher-student network is asymmetric. The teacher cannot receive any feedback from the student"

They add that the pre-trained teacher networks are "cumbersome", but this is more a practical than theoretical complaint.

The terminology gets rejigged here, which makes sense: if self distillation is going on, then it no longer makes sense to speak of 'a teacher' and 'a student' (because what was the student is now its own teacher: as per the motto Be Your Own Teacher). Instead they speak of the 'internal' (formerly student) and 'external' (formerly teacher) models. The models are now both called "peers", so the teacher is "a large peer network" instead.

Which image super-resolution techniques do the authors name check? 📇

Here are some of the cited models with links to their papers and code:

-

introduced mutual-distillation blocks for extracting hierarchical features

-

📄 SRKD (ACCV 2018)

first introduced KD to image SR by constraining the intermediate statistical feature map between the teacher and student networks

- 📄 Gao et al. (2019) Image Super-Resolution Using Knowledge Distillation

-

proposed feature affinity-based distillation to transfer structural knowledge

- based on EDSR,

- 📄 He et al. (2020) FAKD: Feature-Affinity Based Knowledge Distillation for Efficient Image Super-Resolution

-

employed variational information distillation to transfer the privileged information from HR images to the student model.

- in its own words:

effectively boosts the performance of FSRCNN by exploiting a distillation framework, treating HR images as privileged information.

- 📄 Lee et al. (2020) Learning with Privileged Information for Efficient Image Super-Resolution (arX⠶2007.07524v1 [cs⠶CV])

- variational information distillation was introduced by Neil Lawrence's group (Amazon) at CVPR 2019

- 📄 Ahn et al. (2019) Variational Information Distillation for Knowledge Transfer

- The idea is to minimise a cross-entropy loss by variational expectation maximisation, because mutual information is computationally intractable

- The IM algorithm dates back to NeurIPS 2003

- variational information distillation was introduced by Neil Lawrence's group (Amazon) at CVPR 2019

- in its own words:

Where did the authors present their work? What else was on the programme? 📆

This was presented at the ACM's Multimedia 2021 conference (#681). There were several other super-resolution papers:

- 864 📄 Gupta et al. (2021) Ada-VSR: Adaptive Video Super-Resolution with Meta-Learning (arX⠶2108.02832v1 [eess⠶IV])

- 1681 📄 Zhao et al. (2021) Scene Text Image Super-Resolution via Parallelly Contextual Attention Network

- 2629 📄 Zhang et al. (2021) PFFN: Progressive Feature Fusion Network for Lightweight Image Super-Resolution

- 266 📄 Li et al. (2021) Information-Growth Attention Network for Image Super-Resolution

- 366 📄 Wang et al. (2021) Fully Quantized Image Super-Resolution Networks

What was the motivation behind it? 💼

The motivation seems to be for mobile 'on-device' upsampling, or maybe to send less data over a network and reconstruct it reliably. The applications would be pretty open-ended for the company who did this R&D at ByteDance (parent company of TikTok).