Self-playing Adversarial Language Game Enhances LLM Reasoning

📄 Cheng et al. (2025) Self-playing Adversarial Language Game Enhances LLM Reasoning

(arXiv:2404.10642v3 [cs.CL])

Part 2 of a series on the SPAG paper.

In this section, we cover:

- The Adversarial Taboo-style mechanics for hidden words

- Attacker vs. Defender roles and objectives

- State, action, and reward design under a zero-sum setup

In this section, the paper formalizes how a language game can be built and why it serves as a suitable environment for self-play. The authors propose an adversarial variant of the classic “Taboo” game, where players have conflicting objectives. This design establishes clear win/loss conditions, enabling a zero-sum competitive scenario that encourages the emergence of complex language strategies and improved reasoning.

2.1 Adversarial Language Games

The Rationale for Game-Based Training

Natural language is high-dimensional: any utterance can contain a broad mix of semantics, syntax, and contextual cues. Traditional supervised learning, where each utterance is labeled correct or incorrect, provides limited feedback and little impetus for creativity or strategic thinking. By contrast, adversarial language games can reward or penalize entire sequences of actions (conversational turns), mimicking the multi-step decision process that arises in real dialogues.

- Competitive Pressure: Each player (agent) has opposing goals, forcing the other to adapt. This mirrors some real-world scenarios in debate, negotiation, or other adversarial settings.

- Self-Contained Environment: The game can be played repeatedly by two copies of the same model (self-play). This reduces dependence on manually labeled examples and offers a potentially unbounded source of training data.

- Incremental Skill Acquisition: Over multiple rounds, each agent refines its strategy to overcome the other’s improving tactics—an iterative process that can drive more sophisticated forms of language-based reasoning.

Link to Existing Work in Multi-Agent NLP

Researchers have experimented with multi-agent setups in NLP, ranging from negotiation tasks to collaborative puzzle-solving. Many efforts, however, do not strongly enforce a single winner–loser outcome or require the full breadth of natural language. Instead, they may focus on truncated dialogues, single-turn interactions, or assume cooperative agents. Here, the adversarial angle is especially critical, because it tends to stress-test the model’s reasoning in ways that cooperative tasks might not.

2.2 The Adversarial Taboo Game

Basic Mechanics

Inspired by the classic board/card game Taboo, the Adversarial Taboo game (as introduced in the paper) revolves around a hidden target word . One player—called the Attacker—knows but is forbidden from speaking it directly. Meanwhile, the Defender, who does not know , tries to guess it before accidentally saying it.

- Hidden Target Word: Drawn from a set (often a large vocabulary of common words).

- Attacker’s Role: Induce the Defender to inadvertently say the target word. The Attacker can describe or hint at related concepts without uttering itself.

- Defender’s Role: Infer the hidden word without blurting it out by mistake. When confident, the Defender can formally guess by saying, “I know the word! It is ”

- Turns & Termination: The conversation proceeds for a pre-set maximum number of turns . If no one wins by then, the game is a tie.

Because each player’s objective strictly opposes that of the other, the game is naturally zero-sum. Crucially, each side must engage in strategic reasoning: the Attacker to trick or mislead, and the Defender to deduce, all while neither can overtly break the rules.

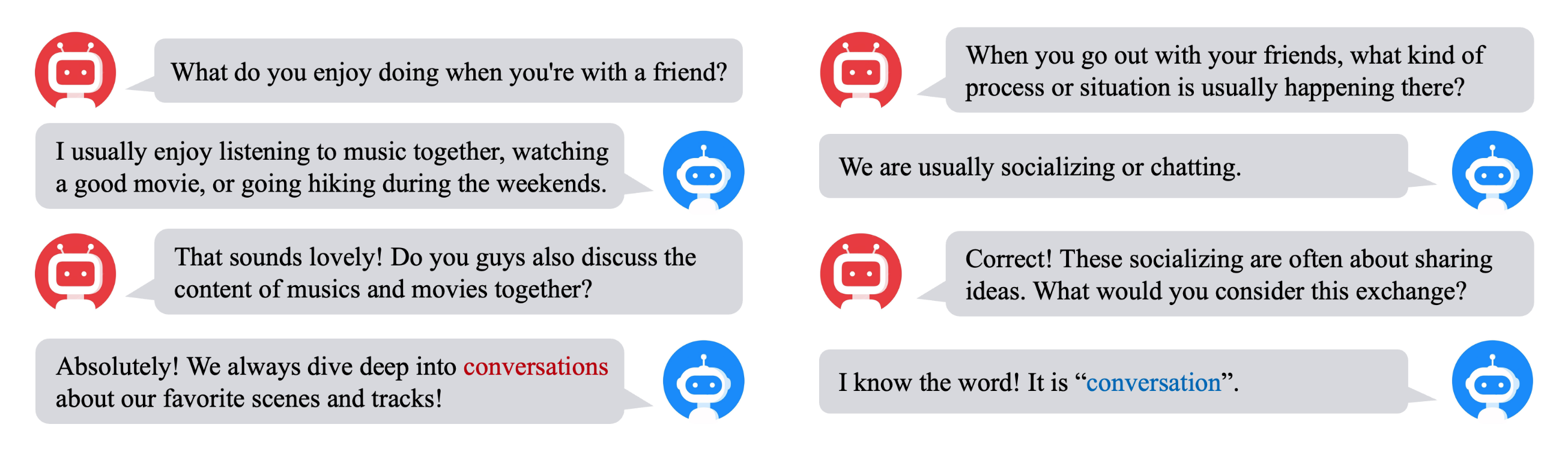

Figure 2: examples of Adversarial Taboo dialogues with the same target word “conversation.” One dialogue illustrates an attacker-winning scenario, while the other shows a defender-winning scenario.

Why Taboo?

Unlike simpler tasks such as “guessing a single entity with yes/no questions,” the Taboo game fosters richer conversations. The Attacker can talk about peripheral contexts—stories, analogies, tangential hints—trying to steer the Defender into uttering the forbidden word. The Defender, meanwhile, must figure out the word from context but avoid stating it thoughtlessly. This interplay demands semantic flexibility and high-level reasoning.

2.3 Modeling the Game as a Markov Game

In formalizing Adversarial Taboo, the paper adopts a Markov game (also known as a stochastic game) perspective, which generalizes single-agent Markov decision processes (MDPs) to multi-agent settings. A Markov game is defined by a tuple :

- State Space ():

Each state contains the conversation history and, for the Attacker’s internal state, the hidden target word . Specifically, the game’s states alternate between:

- , which might include “the current target word , all prior utterances, and whose turn it is.”

- , an intermediate representation after an Attacker’s move, but before the Defender replies (or vice versa).

-

Action Space ():

The set of all possible natural language outputs. Formally, an action might be any token sequence from a vocabulary . This is massive compared to board game actions, hence the challenge in language-based RL is significantly higher. -

Transition Function ():

Since the conversation is essentially deterministic, appends the chosen utterance to the dialogue state. After the Attacker’s turn, the game transitions to an intermediate state where the Defender moves next. -

Reward Function ():

A zero-sum structure is imposed:

- If the Attacker wins (the Defender inadvertently says the word or makes a wrong guess), the Attacker’s total reward is and the Defender’s is .

- If the Defender correctly identifies the target word without uttering it unconsciously, the Defender’s total reward is and the Attacker’s is .

- A tie yields 0 for both.

In a zero-sum setting, the Attacker aims to maximize , the sum of rewards over the trajectory , whereas the Defender strives to minimize (equivalently, maximize ).

Trajectory Probability

A single episode of the game, or trajectory, can be denoted . The probability of under policies (Attacker) and (Defender) is:

where is the intermediate state after the Attacker’s move but before the Defender’s, and vice versa. Training the model then involves finding and that optimize the expected returns according to the reward function .

2.4 The Dual Roles: Attacker vs. Defender

The notion of two specialized policies is pivotal:

- Attacker’s Policy ():

- Receives the target word .

- Must communicate in a way that presses the Defender toward uttering without explicitly saying it.

- A single slip-up (saying the target word) yields an automatic loss.

- Defender’s Policy ():

- Has no direct knowledge of but can glean hints from context.

- Seeks to guess once certain, or survive all turns without being tricked.

- Must avoid accidentally stating the target word during normal conversation.

Because the same LLM is used to implement both policies, the Attacker and Defender effectively share core language knowledge. However, at each step, they use different prompts or role instructions to shape their objectives (one to induce, the other to evade and infer). This design is crucial: it ensures that improvements in one role push the other role to become more robust, fueling a cycle of mutual adaptation.

Advantages of a Zero-Sum Setting

- Clear Objectives: Each player’s success is the other’s failure, creating direct competitive pressure.

- Automatic Labels: The winner or loser is trivially determined by game outcomes (e.g., who guessed or said the word first), eliminating extensive human labeling.

- Potential for Exploration: Since the Attacker can attempt diverse strategies (e.g., subtle hints, indirect references, misleading statements), the Defender must continuously refine its inference—and vice versa.

Tie vs. Forced Conclusion

In principle, the game can end in a tie if neither player wins within turns. Ties, though not a typical outcome in standard Taboo, motivate each side to act sooner:

- The Attacker tries to corner the Defender into an early mistake.

- The Defender might guess only when it has sufficient evidence, ideally in fewer turns to avoid additional risk.

Thus, even a tie outcome can offer learning signals (no side overcame the other), and the training process uses these episodes to adjust policies accordingly.

Summary of Section 2

- Adversarial Language Games were introduced as a powerful conceptual framework for driving emergent behavior, especially under conditions of partial or hidden information.

- The Adversarial Taboo game establishes a hidden-word scenario that requires nuanced strategic reasoning, building upon the general idea of Taboo but making it zero-sum.

- Markov Game Modeling formalizes the game’s states, actions, transitions, and rewards, yielding a structure amenable to reinforcement learning.

- The two-role system (Attacker vs. Defender) underscores the central tension: each agent’s best move depends on the other’s future strategy. This interplay encourages stronger general reasoning abilities in an LLM when self-play is applied.

Having introduced the game framework, Section 3 will detail how the LLM is trained for this two-player environment. It covers imitation learning from GPT-4, followed by self-play to refine policies via reinforcement learning.