📄 Khan et al. (2025) MutaGReP: Execution-Free Repository-Grounded Plan Search for Code-Use (arX⠶2502.15872v1 [cs⠶CL])

Part 3 of a series on MutaGReP — a strategic approach to library-scale code generation.

In this section, we reveal the engine that drives each plan from rough concept to polished solution. We look at how the system searches possible mutations, grounds each step in concrete symbols, and assigns a ranking to ensure that only the most promising plan remains. Balancing breadth of exploration with careful filtering, this process refines every subtask and sub-solution until it meets the user’s needs.

6: Successor Function: Mutating Plans with an LLM

The successor function is responsible for proposing new candidate plans given a current plan. In classical search terms, it expands a node into its children. MutaGReP’s successor function is powered by an LLM, which is prompted to mutate the current plan into one or more improved or extended plans. This is a novel use of the LLM: instead of generating code directly, the LLM produces a refinement or extension of a structured plan (a sequence of intents).

There are two modes for how the LLM can mutate a plan, corresponding to two successor function variants studied in the paper:

-

Monotonic successor: This version can only add new steps to the end of the plan. It treats the current plan as fixed and appends one additional step (intent) to it, leaving existing steps unchanged. Monotonic expansion means the plan grows step by step in a linear fashion (similar to how a person might progressively outline more steps without revising earlier ones).

-

Unconstrained successor: This version can modify any part of the plan. The LLM is allowed to add new steps, delete steps, or refine/alter the text of existing step intents anywhere in the plan. In other words, it can perform arbitrary edits to transform the current plan into a new plan.

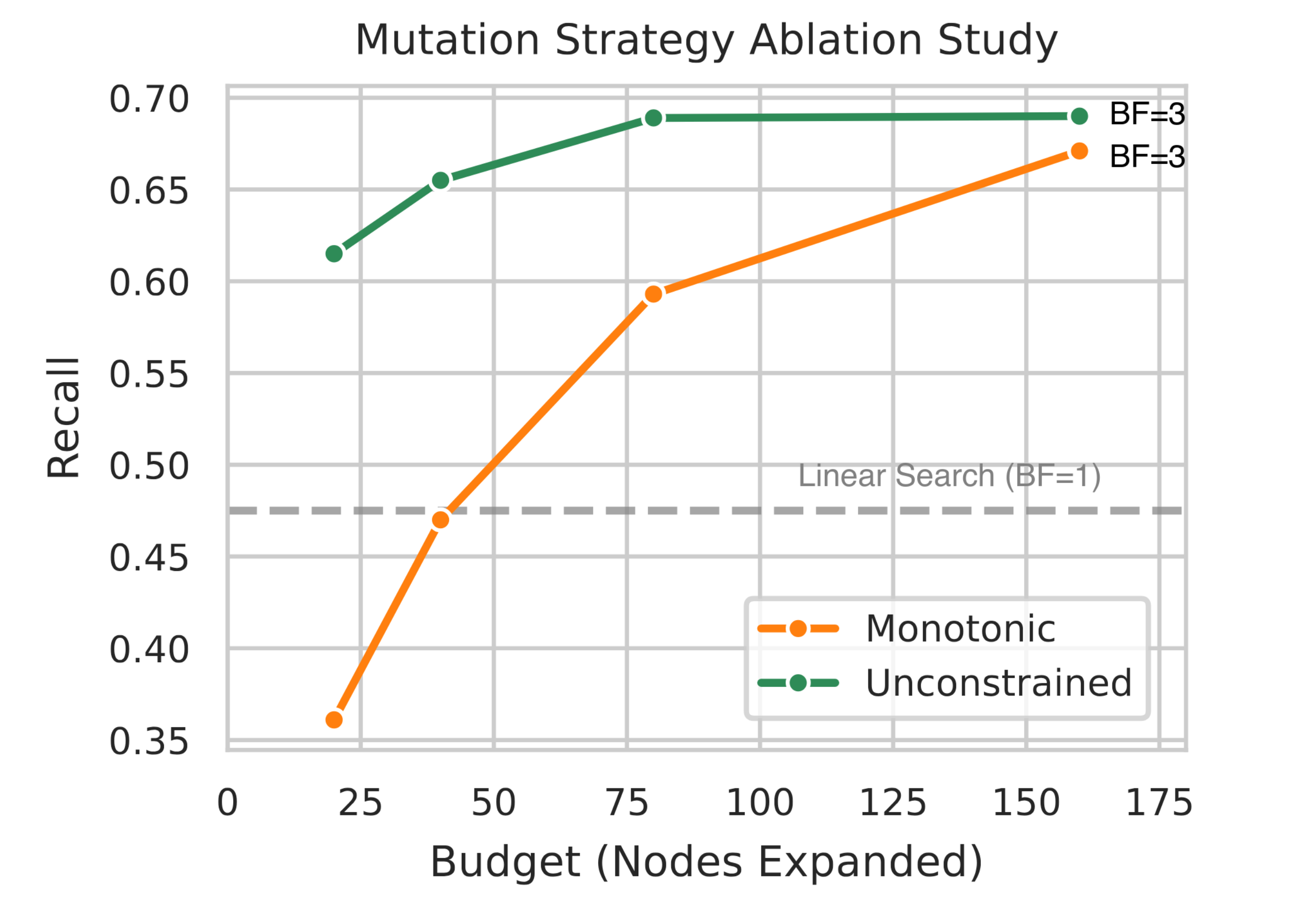

The unconstrained mode is more flexible – it lets the LLM rethink earlier steps if needed or insert steps in between. The monotonic mode is simpler and ensures a non-decreasing number of steps. The authors hypothesized (and confirmed) that unconstrained mutation would explore the space more efficiently, at the cost of a more complex prompt for the LLM. In their ablation, unconstrained mutation consistently outperforms monotonic for a given search budget, especially when the budget is small.

Figure 5 highlighted this: unconstrained successor yields much higher recall of relevant symbols than the monotonic approach, indicating it found better plans with fewer expansions. Intuitively, being able to revise plans helps correct mistakes early and incorporate new ideas without starting over.

How does the LLM know how to mutate a plan? This is handled by carefully designed prompts (detailed in Appendix C of the paper) that instruct the LLM to act as a planning assistant. For example, the prompt might provide the user’s request and the current plan (steps and known symbols) and then say something like: “Propose an improved plan. You may add, remove, or modify steps as needed to better solve the user’s query using the repository’s functions. Respond with the new plan.” For the monotonic variant, the prompt might restrict the instruction to only add one more step.

The exact prompt templates are given in the appendix and are implemented in the code as Jinja2 templates stored in multiline strings (e.g., PROMPT_TEMPLATE). The implementation parses the LLM's output using XML parsing with the xml.etree.ElementTree library, extracting structured step information with numbers and descriptions. For the unconstrained successor function, the system provides context about the repository through a string representation of the repository tree structure and a list of starting symbols, which are included in the prompt to give the LLM awareness of available functionality.

According to the appendix, the authors used OpenAI’s GPT-4 (denoted GPT-4o) for guiding plan mutations, and also mention a smaller model “GPT-4o-mini” was used for generating synthetic intents (more on that later). GPT-4’s strong language understanding helps ensure that the mutated plans remain coherent, relevant to the query, and plausible with respect to the repository.

The output from the LLM is parsed to extract the new plan steps. In implementation, this could be as simple as reading the LLM’s message which might list the steps in order (the code might parse lines prefixed by numbers or bullet points as steps). The new plan (child node) is then represented as a list of PlanStep objects. Any new or changed step intents will need to be grounded in code symbols (since the LLM typically only produces the NL intents, not the symbol lists). That grounding is done by the symbol retriever component, which we discuss in the next section. For example, if the current plan had steps 1 and 2, and the unconstrained successor decides to refine step 2’s intent and add a step 3, then after getting the new intents from the LLM, the system will call the symbol retriever to find symbols for the modified step 2 and for the new step 3. This yields a complete new plan (with intents and symbols for every step) that becomes a node in the search tree.

It’s important that the prompt for the LLM’s successor function gives it knowledge of which symbols are available in the repo. The implementation likely provides some context about the repository – possibly a list of relevant API signatures or the previously retrieved symbols for each step. However, the paper emphasizes that the symbol suggestions themselves come after the LLM proposes intents. So the LLM may propose an intent without knowing the exact symbol, expecting the retrieval to fill it in. To guide it, the prompt may remind it of what symbols exist (perhaps via a brief summary of the repo or examples of symbol names). In Appendix C, they include the full prompts; integrating those, the code might load a template and format it with the current plan, user query, etc., then call the OpenAI API via the openai Python package.

In summary, the successor function is where planning knowledge is injected via the LLM. Rather than brute-forcing all possible next steps, the LLM can use semantic understanding to propose sensible plan modifications. The open-source code encapsulates this logic likely in the plan_search.successor_functions subpackage. For example, one might find classes or functions like MonotonicSuccessor and UnconstrainedSuccessor there, each implementing a method like propose_successors(plan) using a different prompt. The code would call the selected successor function each time a node is expanded. Notably, this design is modular – one could plug in a different LLM or adjust the prompt without changing the rest of the search algorithm. The researchers even mention evaluating two successor strategies; thus the implementation likely makes it easy to switch between them (via a config or parameter). The example usage in the README suggests you can specify --successor_function as “unconstrained” or “monotonic” in the plan search script.

After the LLM proposes new plan intents, the grounding step kicks in to fetch code symbols for those intents. We will cover that next, as it’s tightly coupled to the successor function – together they realize the mutation-and-grounding cycle depicted in Figure 4.

7: Symbol Retriever: Grounding Intents in Code

After the successor function proposes a new or changed step intent, MutaGReP must ground that intent in the actual codebase. The symbol retriever (grounding function) does this by finding relevant code symbols for a given text query (the step intent). This is implemented as a semantic vector search: embedding the intent as a query, retrieving the most semantically similar symbols from the repository, and using those as grounding for the step.

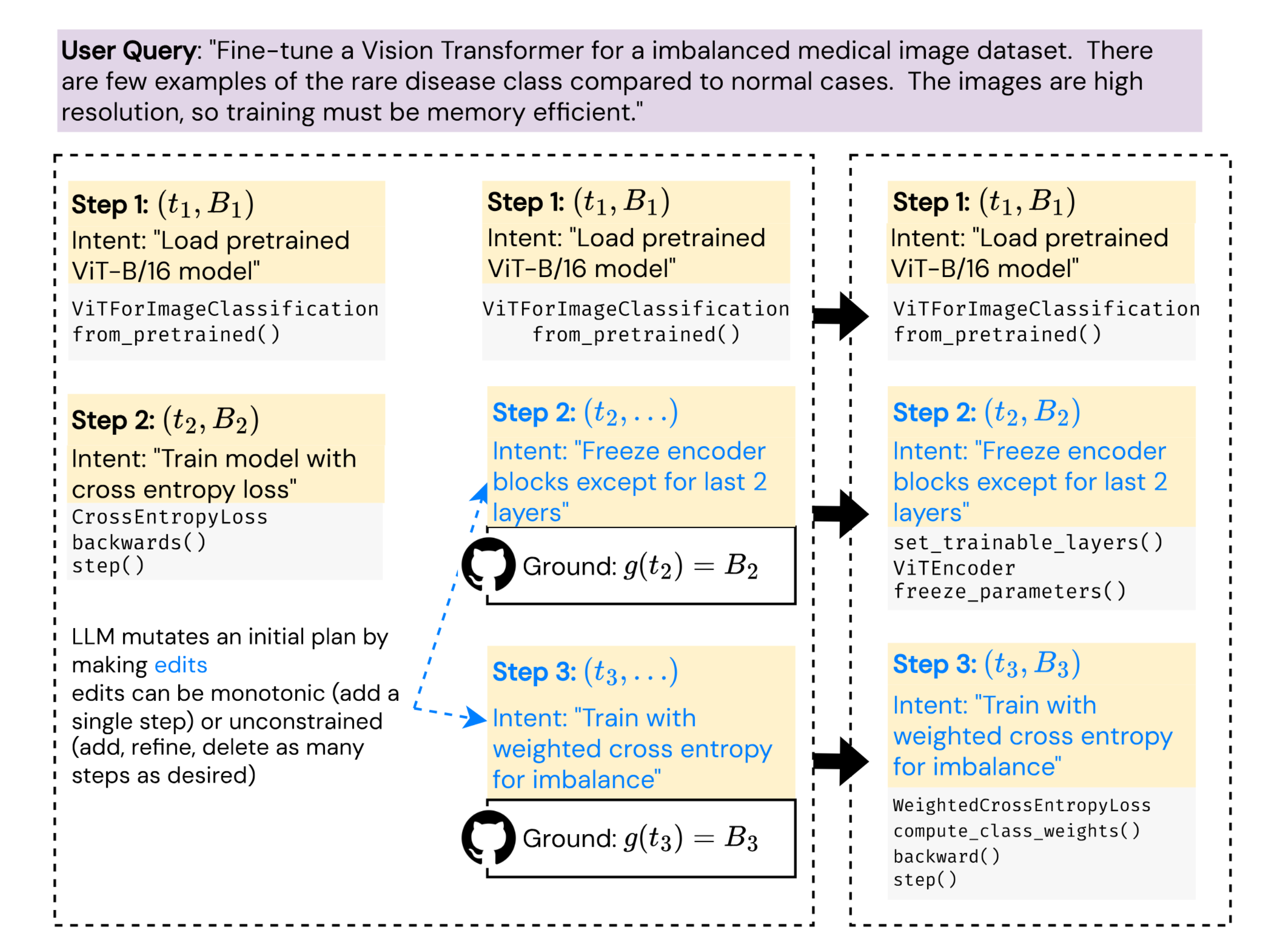

Figure 4 from the paper illustrates this mutation-and-grounding process.

Figure 4 shows how the grounding function maps modified intents to code symbols. In the example, an initial plan is mutated: Step 2's intent is refined and a new Step 3 is added. The grounding function then takes the updated intents ( and ) and retrieves a set of symbols ( and ) for each, drawn from the repository. These symbols might include function names like

WeightedCrossEntropyLoss.compute_class_weightsor class methods likeViTEncoder.freeze_parameters– whatever the retriever deems relevant to the intent "Train with weighted cross entropy for imbalance" or "Freeze encoder blocks except last 2 layers" in that example.

The symbol retriever is vital because it constrains the search to feasible plans. Without it, the LLM might hallucinate steps that sound reasonable but refer to nonexistent functions. By immediately grounding every step in actual repo symbols, MutaGReP ensures that plans remain tied to reality (the codebase). As the paper notes, this keeps the search within the space of realizable plans. If an intent can't retrieve any relevant symbol, that plan likely won't score well or may be pruned, since it indicates the step might not be implementable with current code.

MutaGReP's implementation uses two primary modules for the symbol retrieval functionality: plan_search/symbol_retrievers/ which implements the core vector search capabilities, and plan_search/code_search_tools/ which leverages these retrievers to connect intents with code symbols. The codebase includes two symbol retriever implementations:

OpenAiVectorSearchSymbolRetriever(inopenai_vectorb.py): Uses OpenAI's embedding model to perform semantic vector searchBm25SymbolRetriever(inbm25_simple.py): Uses a BM25 algorithm for keyword-based retrieval

The system integrates two vector database backends:

- LanceDB: An efficient local vector store built on Apache Arrow, used through the PydanticLancedbVectorDatabase class

- Qdrant: Although mentioned in requirements, the primary implementation uses LanceDB, which integrates with Pydantic models

The OpenAiVectorSearchSymbolRetriever class shows how the system embeds and indexes symbol records:

def index(self, embeddables: Sequence[Embeddable[Symbol]]) -> None:

self.vector_database.insert(embeddables)

def __call__(self, queries: Sequence[str], n_results: int = 5) -> Sequence[RetrievedSymbol]:

retrieved_embeddables: list[RetrievedEmbeddable[Symbol]] = []

for query in queries:

retrieved_embeddables.extend(self.vector_database.search(query, n_results))

# ...process and return results

When a query comes (like a plan step's intent), the retriever uses OpenAI's text embedding model (specifically text-embedding-3-large, as noted in Appendix A) to get its vector representation. The nearest-neighbor search is then performed against the vector index using the database's search functionality. The DirectIntentSearchTool class in code_search_tools/direct_intent_search.py shows that the system retrieves exactly 5 symbols per intent by default:

def __init__(self, symbol_retriever: SymbolRetriever, symbols_to_retrieve: int = 5):

self.symbol_retriever = symbol_retriever

self.symbols_to_retrieve = symbols_to_retrieve

The more sophisticated OpenAiOneStepCodeSearchTool implements caching for repeated queries and uses a two-step process:

1. It first generates keywords from the intent using an LLM

2. It then retrieves symbols using those keywords and filters them with another LLM call

For steps that existed before in the parent plan and were unchanged by the mutation, the implementation in UnconstrainedXmlOutputSuccessorFunction.__call__ shows that the system performs re-grounding for every step in the new plan. It iterates through all steps in proposed_successor.parsed_steps and calls the search tool for each one, regardless of whether they were changed or not:

# Ground each step in the proposed plan

grounded_steps: list[PlanStep] = []

for step in proposed_successor.parsed_steps:

search_result = self.search_tool(step.description)

grounded_step = PlanStep(

index=step.step_number,

content=step.description,

search_result=search_result,

)

grounded_steps.append(grounded_step)

The symbol retriever module effectively turns MutaGReP into a planning-over-knowledge problem: the knowledge base is the set of symbol descriptions indexed by the vector DB. By pulling out the right pieces of knowledge for each step, it ensures the plan is actionable. As the authors put it, the search is constrained to the space of realizable plans by this grounding mechanism.

8: Plan Scoring and Ranking Mechanisms

Not all plans are created equal – some will solve the user’s request more completely or efficiently than others. MutaGReP uses a scoring function to estimate the quality or promise of a plan . This scoring serves two purposes:

- If using an informed search (like best-first search), the score guides which node to expand next (always expand the highest-scoring plan in the frontier).

- After search, the scores help choose the final plan(s) to output to the code generation stage. Designing a good scoring function is challenging because we need to predict if a plan will lead to correct code without actually executing the plan.

The paper explores multiple scoring strategies, and the code is likely structured to allow plugging these in (perhaps implemented in plan_search.rankers module). The main variants are:

-

Symbol Diversity Score: a simple heuristic that counts the number of unique symbols used in the plan. The idea is that a plan covering a broader set of relevant symbols might be more likely to solve the task, as it integrates multiple components of the codebase. Formally, if a plan has steps with symbol sets , the diversity score could be (the total count of distinct symbols in the plan). This encourages using more of the available functionality. It’s a cheap calculation and doesn’t require any LLM calls. However, it might favor bloated plans that include irrelevant symbols, so it’s a rough baseline.

-

Decomposed Likert Score: a more sophisticated approach that uses an LLM as a judge. The plan (with its steps and symbols) and the user query are given to an evaluation prompt, asking the LLM to rate the plan on certain criteria. Specifically, the paper uses a 7-point Likert scale for two aspects: (i) plan-level accuracy – does the plan as a whole seem to solve the user’s request? and (ii) step-level feasibility – for each step, is the intent actually achievable with the provided symbols? The LLM (for example, GPT-4) returns a score for the overall plan (let’s call it ) and scores for each step. These are then aggregated into a single score. During search, they use a combined metric

which averages the step feasibility and plan success judgments. When the search is finished and they need to pick the best plan, they actually sort first by plan-level score , breaking ties by average step score. This two-tier sorting ensures that a plan that directly addresses the query (high ) is preferred, using step scores as a secondary criterion. The use of an LLM for scoring is akin to having a very knowledgeable code reviewer evaluate the plan without running it. It’s much more computationally expensive than the diversity heuristic (each scoring involves an LLM API call), but presumably they might only use it for final ranking or for moderate numbers of nodes due to cost. The authors cite that this approach draws inspiration from work on decomposed evaluation metrics improving reliability.

- Oracle Score: for research analysis, they define an “oracle” metric based on ground truth code usage. If they know the actual solution uses certain symbols, they can score a plan by how many of those ground-truth symbols it covers (essentially recall). This isn’t usable in a real scenario (because it requires knowing the solution), but it’s used in experiments to compare how well different methods recover the true relevant symbols. For example, in the ablation plots (like Figure 5), they used the oracle recall as the performance metric when comparing successor functions, to have an objective measure. In code, this might not be implemented except maybe as an offline evaluation tool.

The open-source implementation likely includes at least the diversity and LLM (Likert) rankers. Possibly they default to diversity for simplicity, but the paper’s results show they did use the Likert scorer for final plan selection and also in an informed search scenario. The README’s example command run_plan_search_for_repo.py has a --use_any_ranker (as per text “…use any ranker.”), implying the user can toggle which ranking function to use. They might have presets like --ranker diversity or --ranker likert. The code in plan_search.rankers might have classes like SymbolDiversityRanker and LLMScoreRanker. The LLM ranker would internally call the OpenAI API with a prompt (the prompt templates for this are given in Appendix D). The appendix mentions they provide the prompts used for scoring in Appendix D, meaning the exact phrasing given to GPT-4 to obtain those Likert judgments is documented.

One interesting result from the paper: they compared plans chosen by diversity vs by Likert scoring. Table 3 shows that while the diversity scorer might achieve slightly higher overlap metrics, the Likert scorer’s chosen plans were preferred by an LLM judge 65% of the time. This suggests that the Likert scorer leads to plans that more directly address the query (fidelity), even if they might use slightly fewer symbols. In practical terms, the Likert scorer likely yields better final code. Thus, the system-level evaluation used the LLM-based scoring to pick the final plan provided to the code generator (especially since they later feed plans to models like GPT-4 or Qwen).

From an implementation perspective, using the Likert scorer within the search can be heavy. If they used it for every node expansion in best-first search, that could blow up API usage. They might have mitigated this by, for example, only scoring with LLM for the top few contenders or at the end. Another approach: use the cheap diversity score during expansion (to guide the search roughly), but then use the LLM scorer on the final set of plans to choose the best. The text hints that during search they did use the LLM score (Equation 2) for informed search. If so, they likely limited the number of nodes or did batched scoring. It’s possible they tried both extremes (one experiment might have run with oracle scoring to simulate perfect guidance, another with diversity, another with Likert). The code might allow such combinations for research, though a user of the library might stick with one.

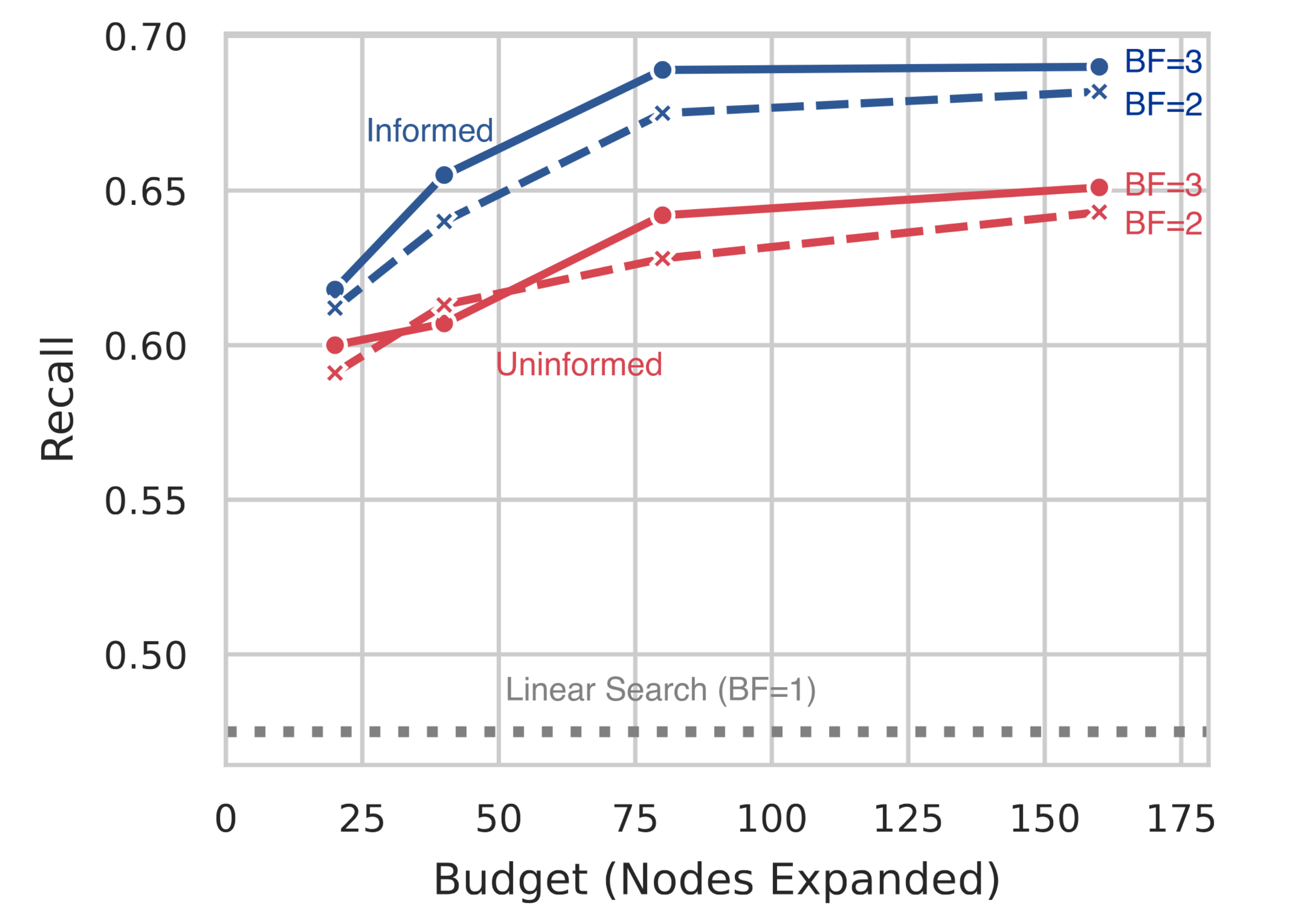

Finally, whichever scoring function is chosen, the search algorithm uses it to rank nodes. In best-first (A*-like) search, you select the node with highest next. In depth-first, you ignore except maybe at the end. They also mention not using breadth-first because it’s inefficient in this context. The experiments confirmed that informed search (with a good heuristic) outperforms uninformed (DFS) and the linear search baseline (ReAct style).

Figure 6 shows a comparison of search strategies: best-first search finds better plans than depth-first, and a higher branching factor (3 vs 2) yields better results, especially for the informed search. This demonstrates the benefit of the scoring function in guiding exploration.

In code, the ranker would be invoked each time a new plan node is generated (to assign it a score), and also possibly re-invoked if an existing plan is modified (though in this tree search, once a node’s created, its score is fixed until maybe we rescore with a different metric at the end). The SearchResult that comes out at the end could include the final best plan and its score. The example snippet in README shows they load plan_search_result_example.json and parse it into SearchResult with Pydantic, which likely contains the chosen plan and maybe some search metadata.

In summary, ranking in MutaGReP is a mix of lightweight heuristics and LLM-based evaluation. The code design reflects this by separating rankers as pluggable components in the plan search pipeline. A user or researcher can choose a ranker depending on the desired balance of speed vs. thoroughness. For a quick run, one might use symbol count; for the highest fidelity plan, one might use the LLM judge. The provided implementation likely defaults to one of them but can be configured. This modular design again underscores the framework nature of MutaGReP: it’s not just a fixed algorithm, but a combination of interchangeable parts (successor function types, ranker types, search strategies) that together define a plan search system.