📄 Khan et al. (2025) MutaGReP: Execution-Free Repository-Grounded Plan Search for Code-Use (arXiv:2502.15872v1 [cs.CL])

Part 2 of a series on MutaGReP — a strategic approach to library-scale code generation.

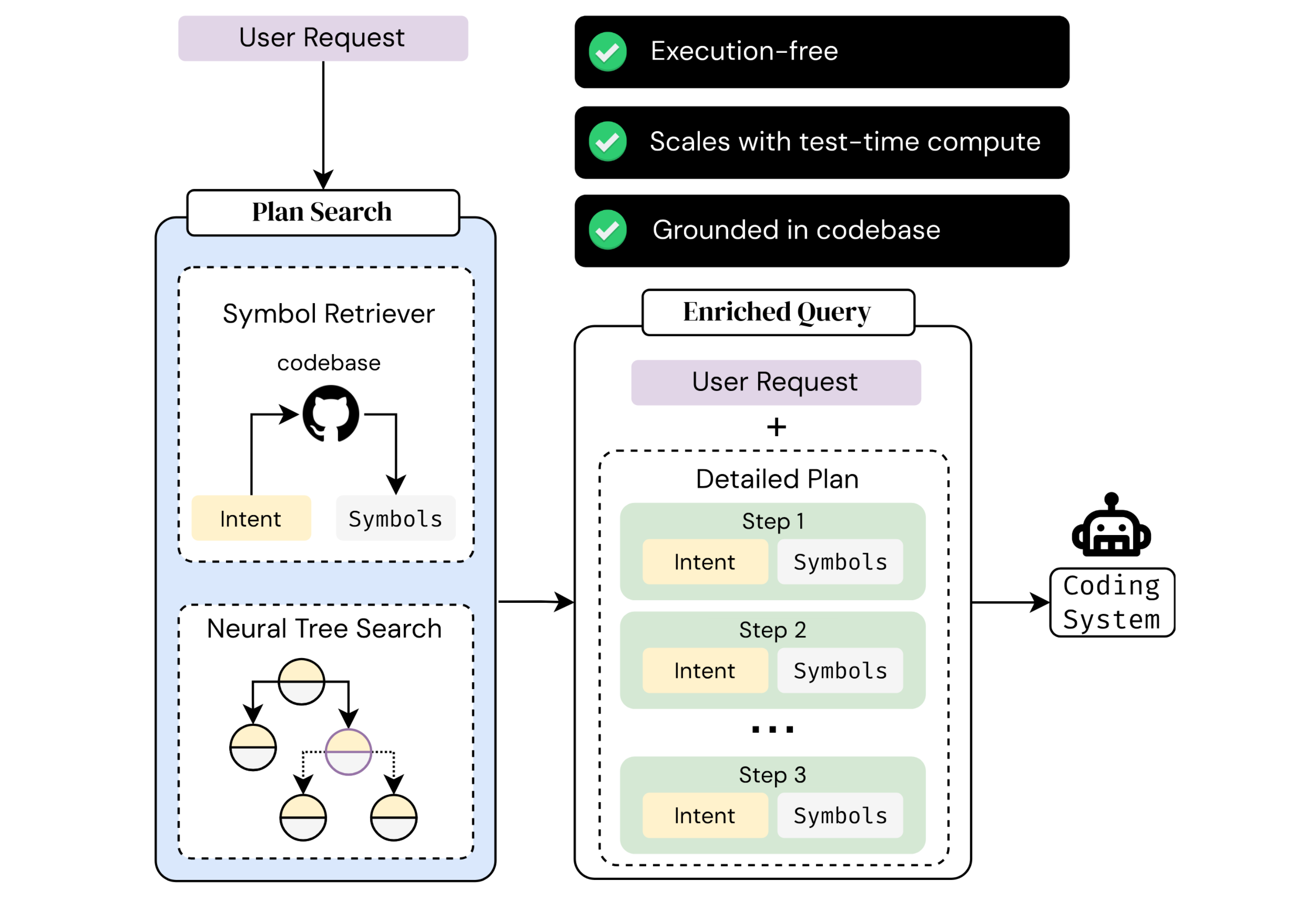

In this section, we explore how each step of a MutaGReP-generated plan is formed and grounded. You'll see how natural language "intents" link directly to actual functions and classes, so plans stay human-readable while remaining directly implementable. By pairing abstract solution steps with concrete repository symbols, the process anchors each step to relevant, usable code.

3: Overview of the MutaGReP Approach

MutaGReP (Mutation-Guided Grounded Repository Plan Search) is a system that transforms user requests into detailed, repository-grounded plans. Each step in a MutaGReP plan consists of:

- A natural language intent, describing a specific action or subtask.

- A set of code symbols (functions, classes, methods) from the repository relevant to that intent.

The end product is an "enriched query": the original request combined with a structured, detailed plan. This enriched query can be passed directly to a code-generation model (e.g., GPT-4o), improving the generated code without requiring the full repository in the model's context.
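To make the idea of an enriched query concrete, here is a minimal sketch of how a request and a plan might be combined into a single prompt. The function name `build_enriched_query`, the `(intent, symbols)` tuple layout, and the prompt wording are all illustrative assumptions, not the format used by the MutaGReP authors.

```python
def build_enriched_query(user_query, steps):
    """Combine the original request with a numbered, symbol-annotated plan.

    `steps` is a list of (intent, symbols) pairs. This layout is a
    hypothetical stand-in for whatever plan structure a real system uses.
    """
    lines = [f"Request: {user_query}", "", "Plan:"]
    for number, (intent, symbols) in enumerate(steps, start=1):
        lines.append(f"{number}. {intent}")
        if symbols:
            # List the grounded repository symbols under the intent.
            lines.append("   symbols: " + ", ".join(symbols))
    return "\n".join(lines)


steps = [
    ("Load a pretrained ViT model",
     ["ViTForImageClassification.from_pretrained"]),
    ("Run the model on an input image", []),
]
enriched = build_enriched_query("Classify an image with ViT", steps)
print(enriched)
```

The resulting string is what would be handed to the code-generation model in place of the raw request.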

Figure 1: MutaGReP workflow, showing how user requests become structured plans grounded in repository symbols.

Experiments on the LongCodeArena benchmark showed that MutaGReP plans use less than 5% of GPT-4o's 128K context window yet rival the coding performance of GPT-4o with its context filled with the repository. The plans also enable smaller models (e.g., Qwen 2.5 Coder 32B and 72B) to match GPT-4o's full-repository-context performance.

MutaGReP achieves this with four key components:

- Symbol mining and indexing: Efficient retrieval of repository symbols.

- Structured plan representation: Linking natural language intents with code symbols.

- Neural tree search: Guided by an LLM to iteratively build and refine plans.

- Integration with code-generation models: Final conversion of plans into executable code.
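As an illustration of the first component, the sketch below retrieves symbols for an intent from a tiny in-memory index. It uses simple token overlap (Jaccard similarity) as a stand-in scoring method; this section does not describe the actual retriever, so the scoring, the function names, and the symbol descriptions in the index are assumptions made for the example.

```python
import re


def tokenize(text):
    """Lowercase alphanumeric tokens; dots and underscores act as separators."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_symbols(intent, index, k=2):
    """Return up to k symbols whose name and description best overlap the intent."""
    query = tokenize(intent)
    scored = []
    for symbol, description in index.items():
        doc = tokenize(symbol + " " + description)
        union = query | doc
        overlap = len(query & doc) / len(union) if union else 0.0
        if overlap > 0:
            scored.append((overlap, symbol))
    # Highest overlap first; ties broken alphabetically for determinism.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [symbol for _, symbol in scored[:k]]


# Toy index: symbol name -> made-up one-line description.
index = {
    "ViTForImageClassification.from_pretrained": "load a pretrained ViT model from a checkpoint",
    "ViTImageProcessor.from_pretrained": "load the image preprocessing configuration",
    "Trainer.train": "run the training loop",
}
top = retrieve_symbols("Load a pretrained ViT model", index, k=1)
print(top)
```

A production retriever would more plausibly use learned embeddings, but the interface (intent in, ranked symbols out) stays the same.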

Critically, MutaGReP performs planning solely in natural language and metadata; no code execution or simulation is required.

4: Plan Representation: Intents and Code Symbols

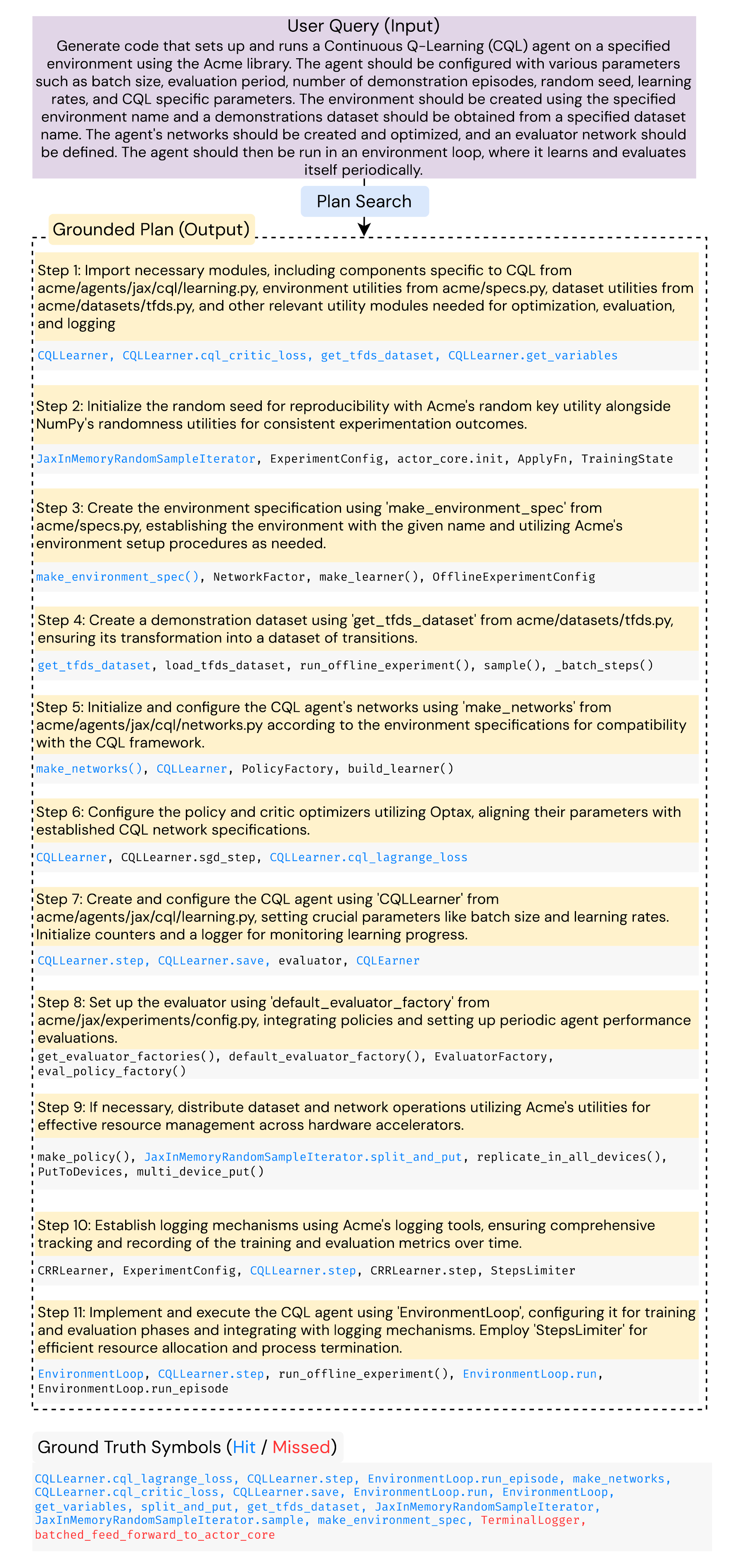

MutaGReP plans are sequences of plan steps, each comprising:

- An intent: A human-readable action or goal (e.g., "Load a pretrained ViT model").

- A set of grounded symbols: Repository-specific functions or classes to implement that intent (e.g., ViTForImageClassification.from_pretrained).

By grounding each step directly in real code symbols, the plan becomes immediately actionable for implementation.

Figure 2: Example of a MutaGReP-generated plan for a LongCodeArena task. Each intent is accompanied by relevant code symbols.

Every plan step explicitly uses repository symbols, differentiating MutaGReP from generic outlines. The plan thus represents a feasible solution grounded directly in available repository APIs, significantly simplifying subsequent code generation.

Plans are internally represented using structured data models (e.g., Pydantic) to ensure consistency, serialization, and ease of use across different components (successor function, symbol retriever, and ranker).
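A plan data model of this kind could look roughly like the following. To keep the example dependency-free it uses standard-library dataclasses rather than Pydantic, and the class and field names (`PlanStep`, `Plan`, `intent`, `symbols`, `user_query`) are hypothetical, not taken from the MutaGReP codebase.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class PlanStep:
    intent: str                      # human-readable action or goal
    symbols: tuple[str, ...] = ()    # grounded repository symbols


@dataclass(frozen=True)
class Plan:
    user_query: str
    steps: tuple[PlanStep, ...] = ()

    def to_json(self) -> str:
        """Serialize the plan so other components can consume it."""
        return json.dumps(asdict(self))

    @classmethod
    def from_json(cls, payload: str) -> "Plan":
        """Rebuild a plan from its JSON form."""
        raw = json.loads(payload)
        steps = tuple(
            PlanStep(step["intent"], tuple(step["symbols"]))
            for step in raw["steps"]
        )
        return cls(raw["user_query"], steps)


plan = Plan(
    "Classify an image with ViT",
    (PlanStep("Load a pretrained ViT model",
              ("ViTForImageClassification.from_pretrained",)),),
)
restored = Plan.from_json(plan.to_json())
print(restored == plan)
```

Making the models immutable (frozen) means a search procedure can share plans between nodes without one mutation silently changing another node's plan.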

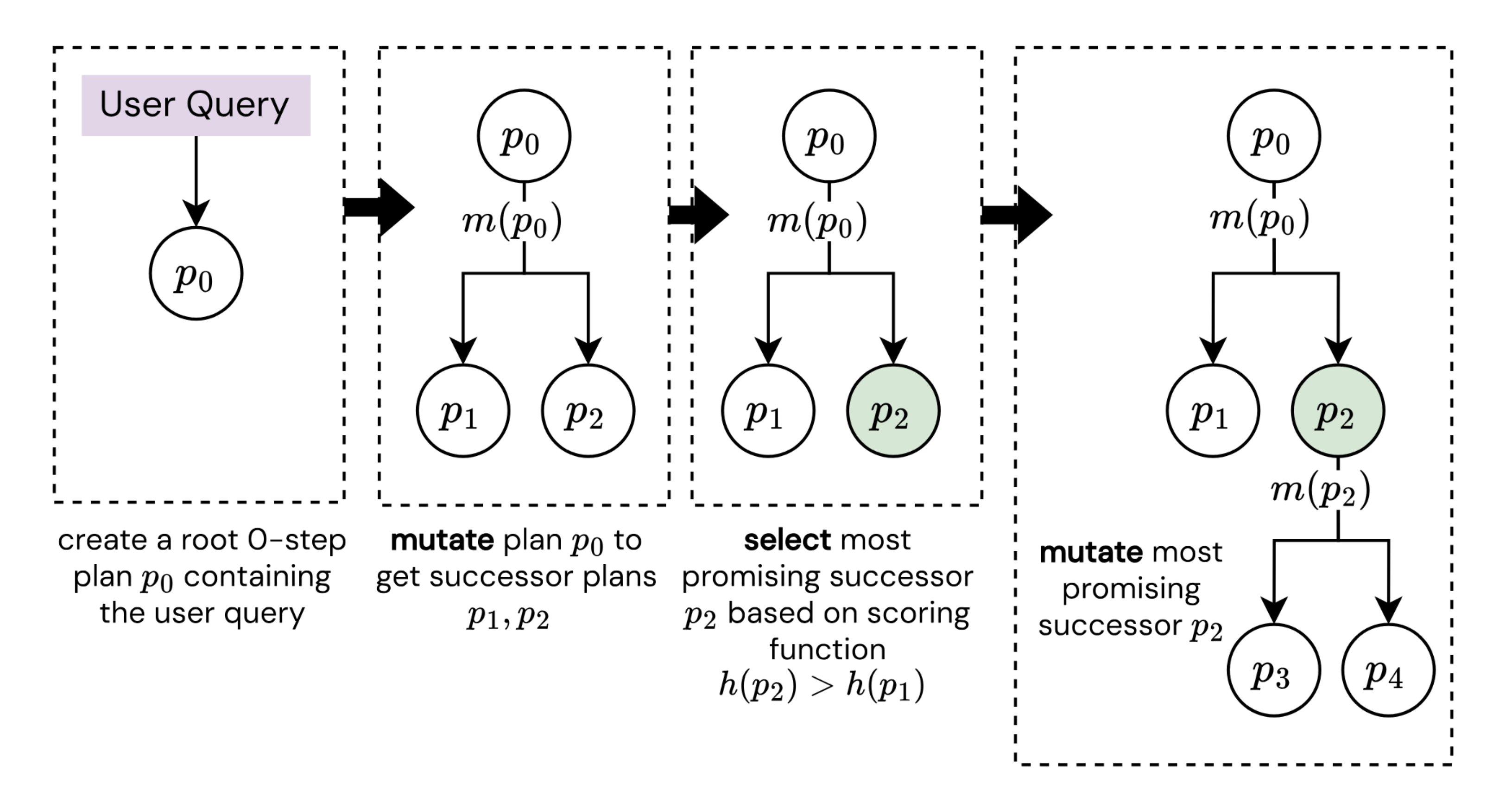

5: Neural Plan Search Framework

MutaGReP employs a neural-guided tree search to generate and refine plans. Beginning with an empty plan and user query, the search explores possible plan expansions using an LLM-based successor function and evaluates them with a heuristic ranking function.

Figure 3: Neural tree search expands nodes representing plans. Each node mutation generates candidate successor plans.

The search can operate in two modes:

- Uninformed search: Simple depth-first exploration without ranking.

- Informed search (best-first): Prioritizes promising plans using heuristic scoring.

Informed search generally outperforms uninformed search by focusing computational resources on higher-quality plans.
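The difference between the two modes can be sketched with a toy search in which only the frontier ordering changes. Everything here is a simplification under stated assumptions: plans are plain tuples of intents, and `successors` and `score` are hand-written stand-ins for the LLM-based successor function and the heuristic ranker.

```python
import heapq

CANDIDATES = ["load model", "load image", "preprocess image", "run inference", "plot loss"]
NEEDED = {"load model", "preprocess image", "run inference"}


def successors(plan):
    """Toy successor function: append one unused candidate intent (max 3 steps)."""
    if len(plan) >= 3:
        return []
    return [plan + (c,) for c in CANDIDATES if c not in plan]


def score(plan):
    """Toy ranker: reward needed intents, penalize irrelevant ones."""
    steps = set(plan)
    return len(steps & NEEDED) - 0.5 * len(steps - NEEDED)


def plan_search(budget, informed=True):
    """Expand at most `budget` nodes and return the best-scoring plan seen."""
    best = ()
    counter = 0  # insertion order; also a tie-breaker in the heap
    frontier = [(0.0, counter, ())]
    for _ in range(budget):
        if not frontier:
            break
        _, _, plan = heapq.heappop(frontier)
        for child in successors(plan):
            counter += 1
            if score(child) > score(best):
                best = child
            # Informed: highest score pops first (best-first).
            # Uninformed: newest node pops first (depth-first, no ranking).
            priority = -score(child) if informed else -counter
            heapq.heappush(frontier, (priority, counter, child))
    return best


informed_best = plan_search(budget=3, informed=True)
uninformed_best = plan_search(budget=3, informed=False)
print(informed_best, score(informed_best))
print(uninformed_best, score(uninformed_best))
```

With the same budget of three expansions, the best-first variant in this toy setup reaches a plan covering all needed intents, while the depth-first variant spends its budget under a poor first branch.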

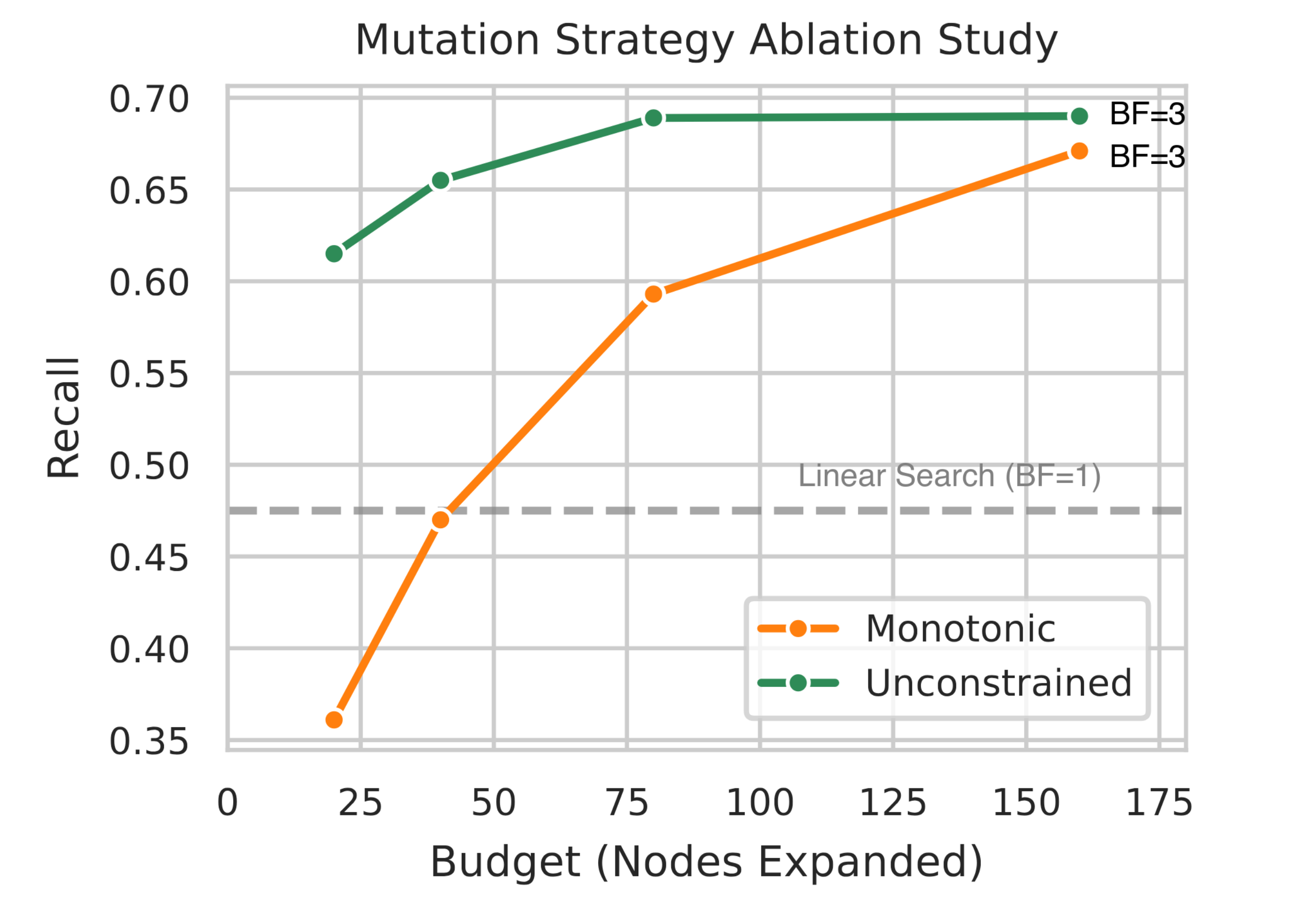

The search terminates based on criteria such as maximum depth, node expansions, or achieving a satisfactory score. Increasing the search budget consistently improves the retrieval quality of relevant symbols, especially when allowing flexible ("unconstrained") mutations that modify existing steps.

Figure 4: Increasing search budget improves symbol recall. Unconstrained mutations significantly outperform simpler monotonic expansions.
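The contrast between monotonic expansion and unconstrained mutation can be illustrated by enumerating the successors each style allows. This is a sketch only: in MutaGReP the mutations are proposed by an LLM rather than enumerated, and treating "unconstrained" as append, replace, or delete is an assumption made for this example.

```python
def monotonic_successors(plan, candidates):
    """Only append a new step; existing steps are never modified."""
    return [plan + (c,) for c in candidates if c not in plan]


def unconstrained_successors(plan, candidates):
    """Append a step, or delete or replace any existing step."""
    out = monotonic_successors(plan, candidates)
    for i in range(len(plan)):
        out.append(plan[:i] + plan[i + 1:])  # delete step i
        for c in candidates:
            if c not in plan:
                out.append(plan[:i] + (c,) + plan[i + 1:])  # replace step i
    return out


plan = ("load model", "plot loss")
candidates = ["run inference"]

mono = monotonic_successors(plan, candidates)
free = unconstrained_successors(plan, candidates)
print(len(mono), len(free))
```

Here only the unconstrained style can produce `("load model", "run inference")`, which repairs the irrelevant second step instead of merely adding to it. That ability to revise earlier choices is one plausible reason the more flexible mutations recover more relevant symbols under the same budget.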

The search returns the highest-ranked plan(s), which can be immediately passed to a code-generation model. Although exhaustive optimality is not guaranteed, empirical results show that MutaGReP efficiently identifies high-quality plans using a manageable computational budget.

The search implementation relies on structured data models for clarity and reuse, facilitating easy customization or extension for different repositories or applications.