📄 Khan et al. (2025) MutaGReP: Execution-Free Repository-Grounded Plan Search for Code-Use (arXiv:2502.15872v1 [cs.CL])

Part 1 of a series on MutaGReP — a strategic approach to library-scale code generation.

In this section, we explore why placing an entire code repository directly in a language model's prompt is inefficient. We outline the reasoning behind a structured, planning-driven approach that supplies only the relevant context. Along the way, we connect these ideas to prior benchmarks and methods to show where selective context has an advantage over naïve repository inclusion.

1: Introduction and Problem Context

Large language models (LLMs) frequently generate code that calls APIs and functions from large code repositories. A straightforward but inefficient method, "code-stuffing", places the entire repository directly in the LLM's prompt. This approach is problematic for two main reasons: most tasks use only a small fraction of the available symbols, and excessively long prompts degrade the model's reasoning abilities (Khan et al., 2025).

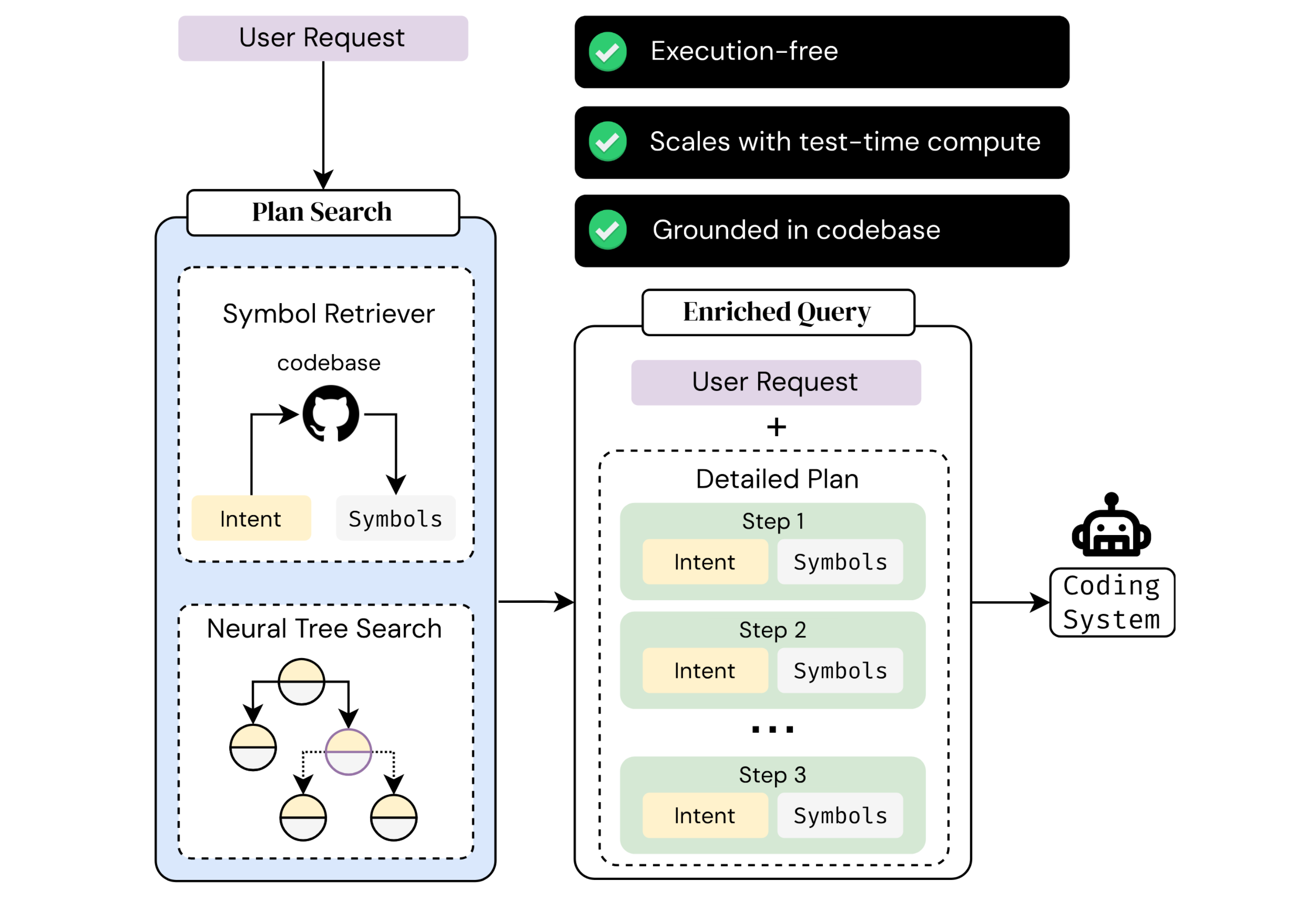

Human programmers, by contrast, typically navigate the repository first, identify the relevant functionality, and plan their solution around those pieces. The paper "MutaGReP: Execution-Free Repository-Grounded Plan Search for Code-Use" addresses the code-stuffing problem with a similar, human-inspired planning approach. It constructs step-by-step plans in which every step is grounded in relevant symbols from the repository. The LLM therefore receives only the essential context, in a structured form, rather than the whole repository dumped into the prompt.

Figure 1 illustrates this structured approach: a user's query, combined with a detailed plan referencing concrete code symbols, creates an "enriched query" for subsequent code generation.
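To make the idea of an "enriched query" concrete, here is a minimal sketch of how a user query and a symbol-grounded plan might be combined into one prompt. The names `PlanStep` and `build_enriched_query`, the text layout, and the example symbols are all hypothetical illustrations; the source does not specify the paper's actual prompt format.

```python
from dataclasses import dataclass, field


@dataclass
class PlanStep:
    """One step of a plan (hypothetical structure, not the paper's schema)."""
    intent: str                                        # natural-language subtask
    symbols: list[str] = field(default_factory=list)   # repository symbols it relies on


def build_enriched_query(user_query: str, plan: list[PlanStep]) -> str:
    """Concatenate the query with a numbered plan and the symbols each step uses."""
    lines = [f"Task: {user_query}", "", "Plan:"]
    for i, step in enumerate(plan, start=1):
        lines.append(f"{i}. {step.intent}")
        for sym in step.symbols:
            lines.append(f"   - uses: {sym}")
    return "\n".join(lines)


# Example with made-up symbol names from an imaginary library.
example_plan = [
    PlanStep("Load the dataset from disk", ["toylib.io.load_csv"]),
    PlanStep("Fit a model on the loaded data", ["toylib.models.Regressor.fit"]),
]
enriched = build_enriched_query("Train a regressor on data.csv", example_plan)
print(enriched)
```

Only the symbols named in the plan reach the prompt, which is the point of the contrast with code-stuffing: the context grows with the size of the plan rather than the size of the repository.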

2: Background: Repository-Grounded Code Generation

Repository-grounded code generation involves leveraging existing repositories (libraries or frameworks) to assist in new code creation. Prior research has primarily explored two paths:

Software Engineering Agents

These agents iteratively edit existing codebases to fix bugs or implement new features, relying heavily on execution-based feedback such as unit tests. For example, the SWE-bench benchmark evaluates software agents on real GitHub issues: an agent must modify the code to resolve the issue, and its changes are checked by running the repository's tests.

The "Code-Use" Paradigm

In this approach, the repository is treated as a library from which relevant functionalities are selected to solve user-specified tasks. CodeNav (Gupta et al., 2024), for instance, uses keyword-based code search and an execution environment to progressively build solutions from repository symbols. However, earlier benchmarks in this domain often featured oversimplified scenarios, failing to fully assess an LLM's performance in realistic, large-scale conditions.

The LongCodeArena (LCA) benchmark fills this gap by introducing more complex, realistic tasks derived directly from example scripts provided by popular GitHub repositories. This setting pushes LLMs to intelligently identify and utilize the appropriate portions of a complex library, clearly motivating a planning-driven approach like MutaGReP.

Recent research suggests that introducing a planning step can improve code generation. Wang et al. (2024) propose PlanSearch, which searches over natural language solution plans before generating code, yielding more diverse and accurate solutions in competitive programming tasks. MutaGReP builds on this foundation by not only searching for a sequence of subtasks but explicitly grounding each step in the repository’s API. By ensuring every plan step can be implemented with existing repository symbols, MutaGReP provides a structured yet realistic context for code generation.
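As a rough illustration of what "grounded in the repository's API" could mean in practice, the sketch below collects top-level function, class, and method names from Python source using the standard `ast` module, then flags plan steps that reference no known symbol or an unknown one. This is an assumed sanity check for illustration only, not the grounding procedure used by MutaGReP; `collect_symbols` and `ungrounded_steps` are hypothetical helpers.

```python
import ast


def collect_symbols(source: str, module: str) -> set[str]:
    """Return qualified names of top-level functions, classes, and their methods."""
    symbols: set[str] = set()
    func_types = (ast.FunctionDef, ast.AsyncFunctionDef)
    for node in ast.parse(source).body:
        if isinstance(node, func_types):
            symbols.add(f"{module}.{node.name}")
        elif isinstance(node, ast.ClassDef):
            symbols.add(f"{module}.{node.name}")
            for item in node.body:
                if isinstance(item, func_types):
                    symbols.add(f"{module}.{node.name}.{item.name}")
    return symbols


def ungrounded_steps(plan: list[tuple[str, list[str]]], known: set[str]) -> list[int]:
    """Indices of steps that cite no symbols, or cite a symbol not in `known`."""
    return [
        i for i, (_intent, syms) in enumerate(plan)
        if not syms or not set(syms) <= known
    ]


# Toy repository source and a toy plan; all names are invented for the example.
toy_source = (
    "def load(path):\n"
    "    pass\n"
    "\n"
    "class Model:\n"
    "    def fit(self, x):\n"
    "        pass\n"
)
known_symbols = collect_symbols(toy_source, "toy")
toy_plan = [
    ("Load the data", ["toy.load"]),
    ("Train the model", ["toy.Model.train"]),   # no such method in toy_source
    ("Report results", []),                      # cites nothing
]
bad_steps = ungrounded_steps(toy_plan, known_symbols)
print(bad_steps)
```

A plan whose steps all pass a check like this can, at least in principle, be implemented with symbols that exist, which is the property that separates a grounded plan from a free-form natural-language one.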

Another closely related approach is test-time search, exemplified by systems such as AlphaCode and CodeTree. These methods generate multiple candidate solutions and select the most effective ones based on execution feedback. Similarly, CodeMonkeys iteratively generates and tests solutions to improve accuracy on challenging tasks. MutaGReP adopts a similar exploratory strategy but differs in one important respect: it is "execution-free." It performs a systematic tree search entirely in the space of structured plans, avoiding the complexity and computational overhead of running code. This lets it explore candidate solutions efficiently while retaining the benefits of systematic backtracking and refinement.
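The following is a minimal sketch of what an execution-free search over plans could look like: a best-first tree search in which candidate plans are expanded by a `mutate` function and ranked by a `score` function, with no code ever run. Both callables are placeholders supplied by the caller (in a real system they would presumably be model-backed), and the best-first strategy, the budget, and the toy scoring rule are assumptions made for this example rather than details taken from the paper.

```python
import heapq
from itertools import count
from typing import Callable, Sequence

Plan = tuple[str, ...]  # a plan is an ordered tuple of step descriptions


def plan_search(
    root: Plan,
    mutate: Callable[[Plan], Sequence[Plan]],
    score: Callable[[Plan], float],
    budget: int = 20,
) -> Plan:
    """Best-first search over plans; returns the highest-scoring plan seen."""
    tie = count()  # tie-breaker so the heap never compares plans directly
    best, best_score = root, score(root)
    frontier = [(-best_score, next(tie), root)]
    seen = {root}
    for _ in range(budget):          # each iteration expands one plan
        if not frontier:
            break
        _, _, plan = heapq.heappop(frontier)
        for child in mutate(plan):
            if child in seen:
                continue
            seen.add(child)
            s = score(child)
            if s > best_score:
                best, best_score = child, s
            # Lower-scoring children stay on the frontier, which is what
            # allows the search to back up and try another branch later.
            heapq.heappush(frontier, (-s, next(tie), child))
    return best


# Toy stand-ins: a mutation appends one unused step; the score rewards
# covering the required steps and lightly penalizes plan length.
REQUIRED = {"load", "train", "evaluate"}
CANDIDATES = ("load", "train", "evaluate", "plot")


def toy_mutate(plan: Plan) -> list[Plan]:
    return [plan + (step,) for step in CANDIDATES if step not in plan]


def toy_score(plan: Plan) -> float:
    return len(REQUIRED & set(plan)) - 0.1 * len(plan)


best_plan = plan_search((), toy_mutate, toy_score)
print(best_plan)
```

Because scoring happens on the plan itself, the cost of each node is one call to `score` rather than a sandboxed execution, which is where the efficiency argument for searching in plan space comes from.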